liberia$prev <- liberia$npos/liberia$ntest

ggplot(liberia, aes(x = elevation, y = prev)) + geom_point() +

labs(x="Elevation (meters)",y="Prevalence")

| Function | R Package | Used for |

|---|---|---|

lmer |

lme4 |

Fitting linear mixed models |

glmer |

lme4 |

Fitting generalized linear mixed models |

glgpm |

RiskMap |

Fitting generalized linear geostatistical models |

s_variogram |

RiskMap |

Computing the empirical variogram and carrying out permutation test for spatial independence |

This chapter introduces the process of moving from exploratory analysis to the formal specification of statistical models, through to parameter estimation and interpretation of results. We outline the main steps involved, highlight the role of covariates, and present simple approaches for exploring and modelling non-linear relationships. We then turn to the assessment of residual spatial correlation, which motivates the use of geostatistical models, and describe how maximum likelihood methods can be applied for their estimation.

As illustrated in Figure 1.8, exploratory analysis is the first step in any statistical analysis. This stage is crucial for guiding how covariates should be incorporated into the model and for assessing whether the remaining unexplained variation exhibits spatial correlation. In the case of count data, this stage also involves examining the presence of overdispersion, a phenomenon that often arises when important covariates are omitted or when there is unaccounted heterogeneity in the data. In the following sections, we explain what overdispersion is in more detail and why checking for its presence is an essential prerequisite before fitting geostatistical models to count data.

Assessment of the association between the health outcome of interest and non-categorical (i.e. continuous) risk factors can be carried out using graphical tools, such as scatter plots. The graphical inspection of the empirical association between the outcome and the covariates is especially useful to identify non-linear patterns in the relationship which should then be accounted for in the model formulation.

In this section, we focus on the case where the observed outcome is a count, which requires a different treatment from continuously measured outcomes. The latter have been extensively discussed in the statistical literature and are covered in most standard textbooks (see, for example, Chapter 1 of Weisberg (2014)).

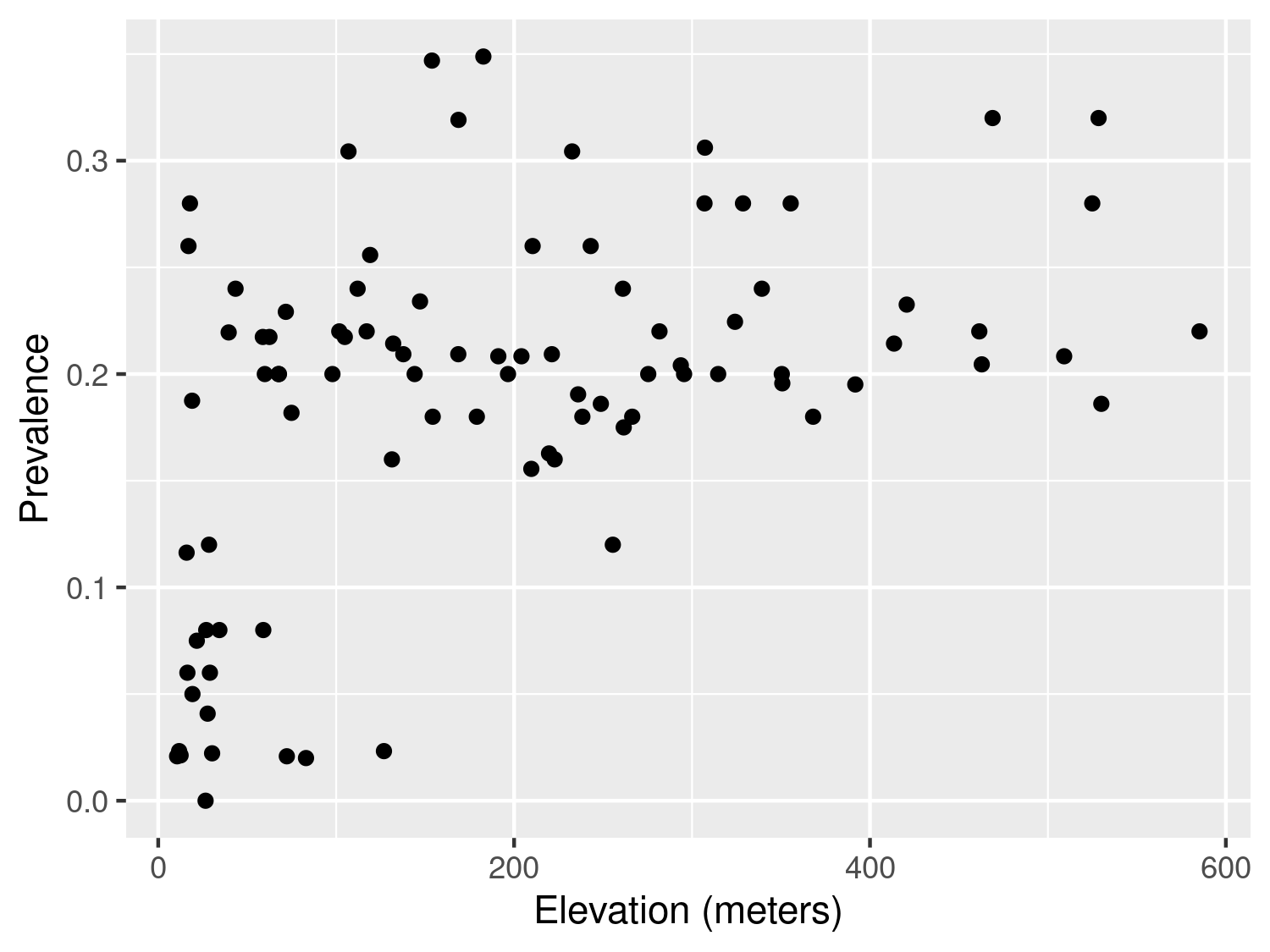

Let us first consider the example of the river-blindness data in Liberia (Section 1.4.2), and examine the association between prevalence and elevation. We first generate a plot of the prevalence against the measured elevation at each of the sample locations

liberia$prev <- liberia$npos/liberia$ntest

ggplot(liberia, aes(x = elevation, y = prev)) + geom_point() +

labs(x="Elevation (meters)",y="Prevalence")

The plot shown in Figure 3.1 shows that, as elevation increases from 0 to around 150 meters, prevalence rapidly increases to around 0.25 and, for larger values in elevation than 150 meters, the relationship levels off. How can we account for this in a regression model? To answer this question rigorously, the plot in Figure 3.1 cannot be used. This is because, when the modelled outcome is a bounded Binomial count, regression relationships are usually specified on the logit-transformed prevalence (log-odds) scale; see Table 1.3 in Section Section 1.5. To explore regression relationships in the case of prevalence data, it is convenient to use the so-called empirical logit in place of the empirical prevalence. The empirical logit is defined as

\[ l_{i} = \log\left\{\frac{y_i + 1/2}{n_i - y_i + 1/2}\right\} \tag{3.1}\]

where \(y_i\) are the number of individuals who tested positive for river-blindness and \(n_i\) is the total number of people tested at a location. The reason for using the empirical logit, rather than the standard logit transformation applied directly to the empirical prevalence, is that it delivers finite values for empirical prevalence values of 0 and 1, for which the standard logit transformation is not defined.

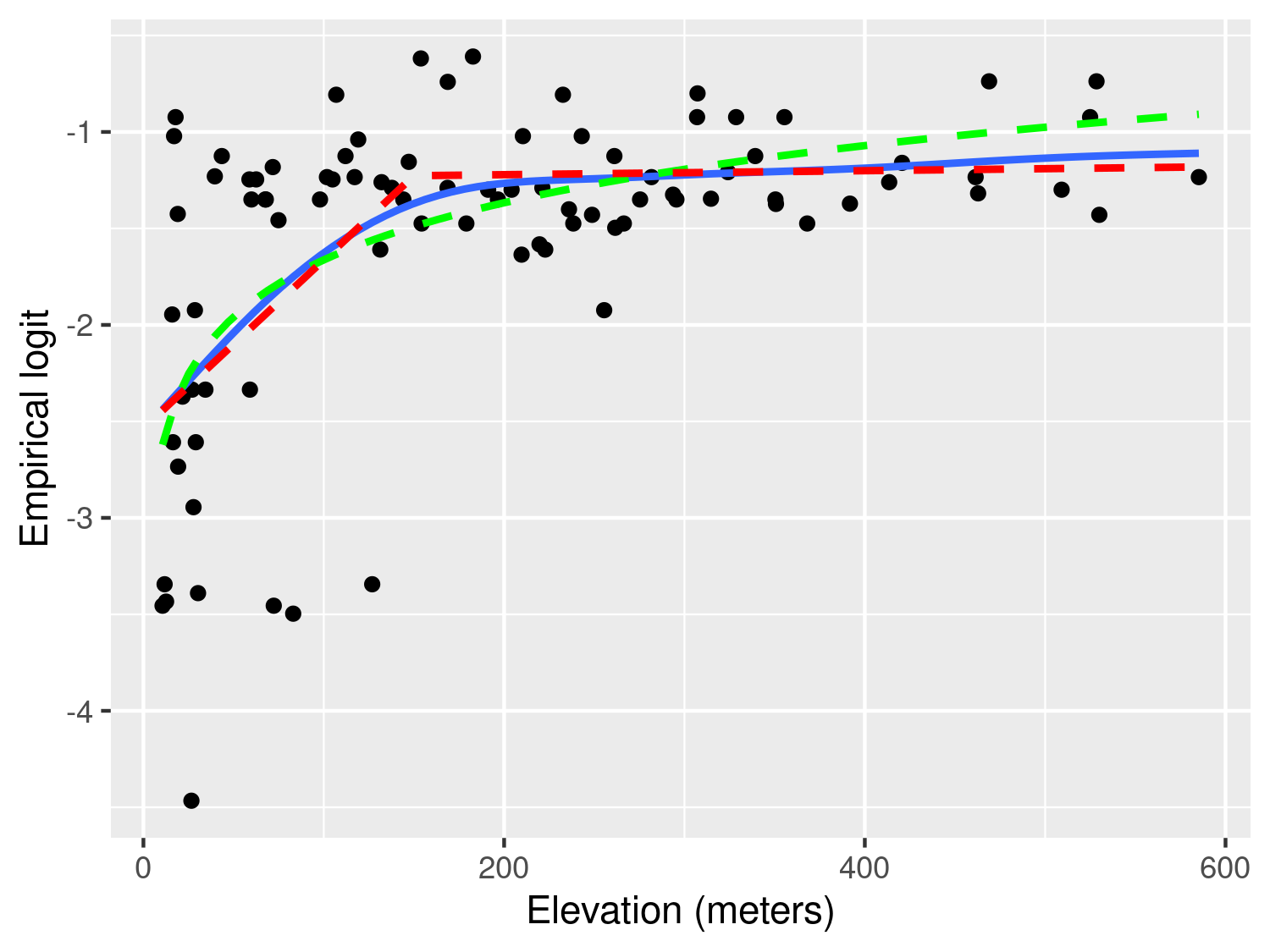

# The empirical logit

liberia$elogit <- log((liberia$npos+0.5)/

(liberia$ntest-liberia$npos+0.5))

ggplot(liberia, aes(x = elevation, y = elogit)) + geom_point() +

# Adding a smoothing spline

labs(x="Elevation (meters)",y="Empirical logit") +

stat_smooth(method = "gam", formula = y ~ s(x),se=FALSE)+

# Adding linear regression fit with log-transformed elevation

stat_smooth(method = "lm", formula = y ~ log(x),

col="green",lty="dashed",se=FALSE) +

# Adding linear regression fit with change point in 150 meters

stat_smooth(method = "lm", formula = y ~ x + pmax(x-150, 0),

col="red",lty="dashed",se=FALSE)

Figure 3.2 shows the scatter plot of the empirical logit against elevation. In this plot, we have also added three lines though the stat_smooth from the ggplot2 package. Using this function, we first pass the term gam to method to add a penalized smoothing spline (Hastie, Tibshirani, and Friedman 2001), represented by the blue solid line. The smoothing spline allows us to better discern the type of relationship and how to best capture it using a standard regression approach. As we can see from Figure 3.2, the smoothing spline corroborates our initial observation of a positive relationship up to about 150 meters, followed by a plateau.

To capture this non-linear relationship, we can use the two following approaches. The first is based on a simple log-transformation of elevation and is represented in Figure 3.2 by the green line. If were to express this relationship using a standard Binomial regression model, this would take the form \[ \log\left\{\frac{p(x_i)}{1-p(x_i)}\right\} = \beta_0 + \beta_1 \log\{e(x_i)\} \tag{3.2}\] where \(p(x_i)\) and \(e(x_i)\) are the river-blindness prevalence and elevation at sampled location \(x_i\), respectively.

Alternatively, the non-linear effect of elevation on prevalence could be captured using a linear spline. Put in simple terms, we want to fit a linear regression model that allows for a change in slope above 150 meters. Formally, this is expressed in a Binomial regression model as \[

\log\left\{\frac{p(x_i)}{1-p(x_i)}\right\} = \beta_0 + \beta_1 e(x_i) + \beta_{2} \max\{e(x_i)-150, 0\}.

\tag{3.3}\] Based on the equation above, the effect of elevation below 150 meters is quantified by the parameter \(\beta_1\). Above 150 meters, instead, the effect of elevation becomes \(\beta_1 + \beta_2\). Note that the function pmax (and not the standard base function max) should be used in R when the computation of the maximum between a scalar value and each of the components of a numeric vector is required.

Before proceeding further, it is important to explain the differences between the use of the logarithmic transformation (Equation 3.2) and the linear spline (Equation 3.3). We observe that both curves provide a similar fit to the data, with larger differences observed for larger values in elevation, where the log-transformed elevation models yield larger values for the predicted prevalence. This also suggests that if we were to extrapolate the predictions beyond 600 meters in elevation the implied pattern by the model with the log-transformed elevation would predict an increasingly larger elevation, which is unrealistic, since the fly that transmits the disease cannot breed at those altitudes. The linear spline model instead would generate predictions that would be very similar to those observed between 150 and 600 meters. From this point of view, the linear spline model would thus have more scientific validity than the other model. However, which of the two approaches should be chosen to model the effect of elevation is a question that closely depends on the research question to be addressed.

If the interest of the study was in better understanding the association between elevation and prevalence, the linear spline model does not only provide a more credible explanation but also its regression parameters can be more easily interpreted. In fact, for a unit increase in elevation, the multiplicative change in the odds for river-blindness is \(\exp\{\beta_1\}\), if elevation is below 150 meters, and \(\exp\{\beta_1+\beta_2\}\), if elevation is above 150 meters. When instead we use the log-transformed elevation, the interpretation of \(\beta_1\) in Equation 3.2 is slightly more complicated, as it is based on the multiplicative increase in elevation by the same amount given by the base of the algorithm, which is about \(e \approx 2.718\). To avoid this, one could rescale the regression coefficient as, for example, \(\beta_1/\log_{2}(e)\) which would be interpreted as the multiplicative change in the odds for river-blindness for a doubling in elevation. However, a doubling in elevation is less meaningful when considering larger values of elevation.

The letter \(e\) stands for the so-called Euler’s number and represents the base of the natural logarithm. In the book, we write \(\log(\cdot)\) to mean the “natural logarithm of \(\cdot\)”.

When the goal of statistical analysis is instead in developing a predictive model for the outcome of interest, the explanatory power and interpretability of the model may be of less concern. For this reason, the model with the log-transformed model could be preferred over the model with the linear spline, if it is shown to yield more predictive power. We will come back to this point again in Chapter 4, where we will show how to assess and compare the predictive performance of different geostatistical models.

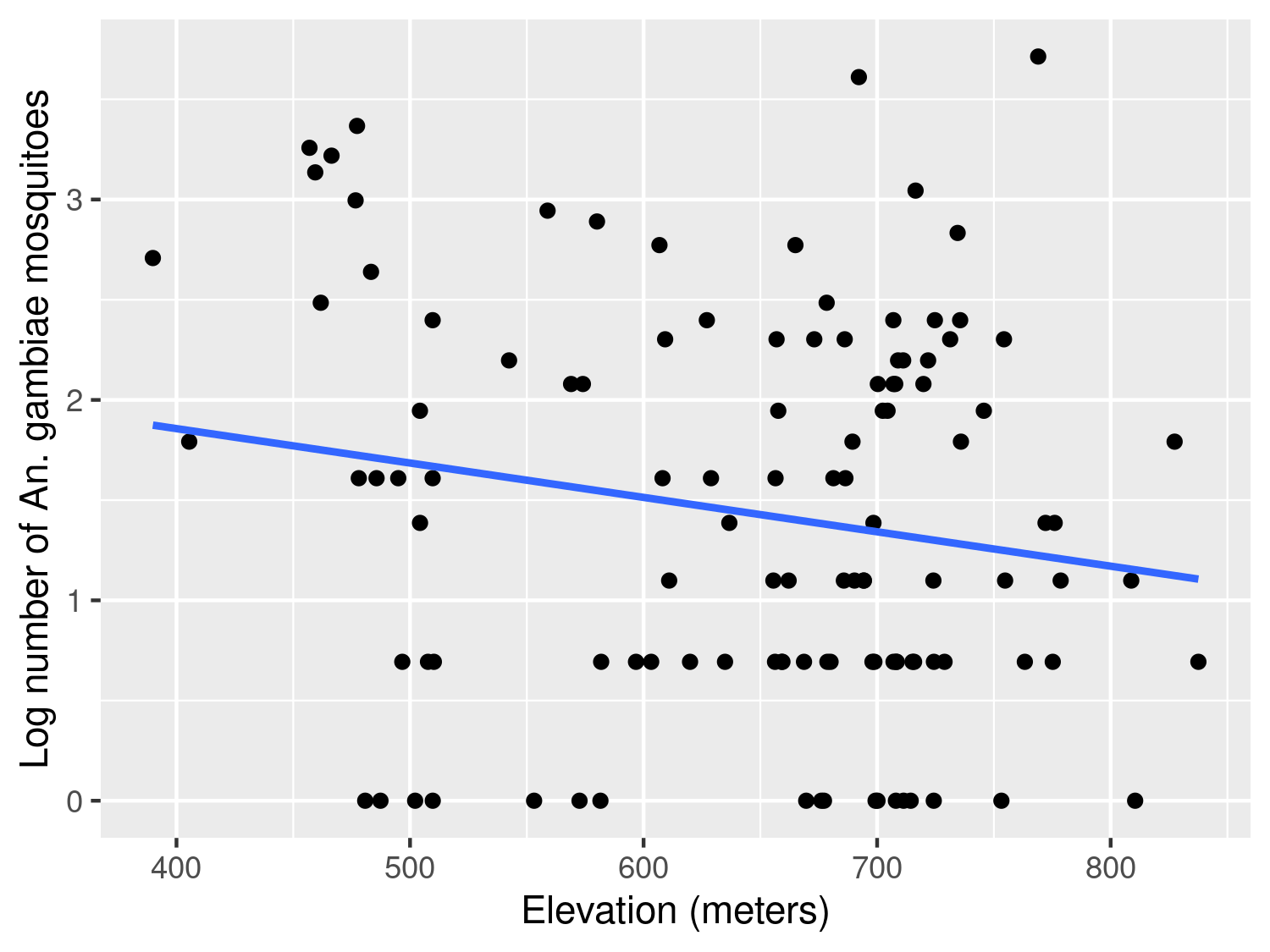

The other type of aggregated count data that we consider are unbounded counts. The Anopheles mosquitoes data-set (Section 1.4.4) is an example of this, since there is no upper limit to the number of mosquitoes that can be trapped at a location. Let us consider the covariate represented by elevation. In this case, the simplest model that can be used to analyse the data is a Poisson regression, where the linear predictor is defined as the log of the mean number of mosquitoes (Table 1.3). Hence, exploratory plots for the association with covariates should be generated using the log transformed counts of mosquitoes. In this instance, to avoid taking the log of zero, we can add 1 to the reported counts, if required. The variable of the An.gambiae in the anopheles data-set does not contain any 0, hence we simply apply the log transformation without adding 1.

anopheles$log_counts <- log(anopheles$An.gambiae)

ggplot(anopheles, aes(x = elevation, y = log_counts)) + geom_point() +

# Adding a smoothing spline

labs(x="Elevation (meters)",y="Log number of An. gambiae mosquitoes") +

stat_smooth(method = "lm", formula = y ~ x, se=FALSE)

The scatter plot in Figure 3.3 shows a weak negative association, with the average number of mosquitoes tending to decrease as elevation increases. In this case, assuming a linear effect of elevation on the log of the expected mosquito count appears reasonable.

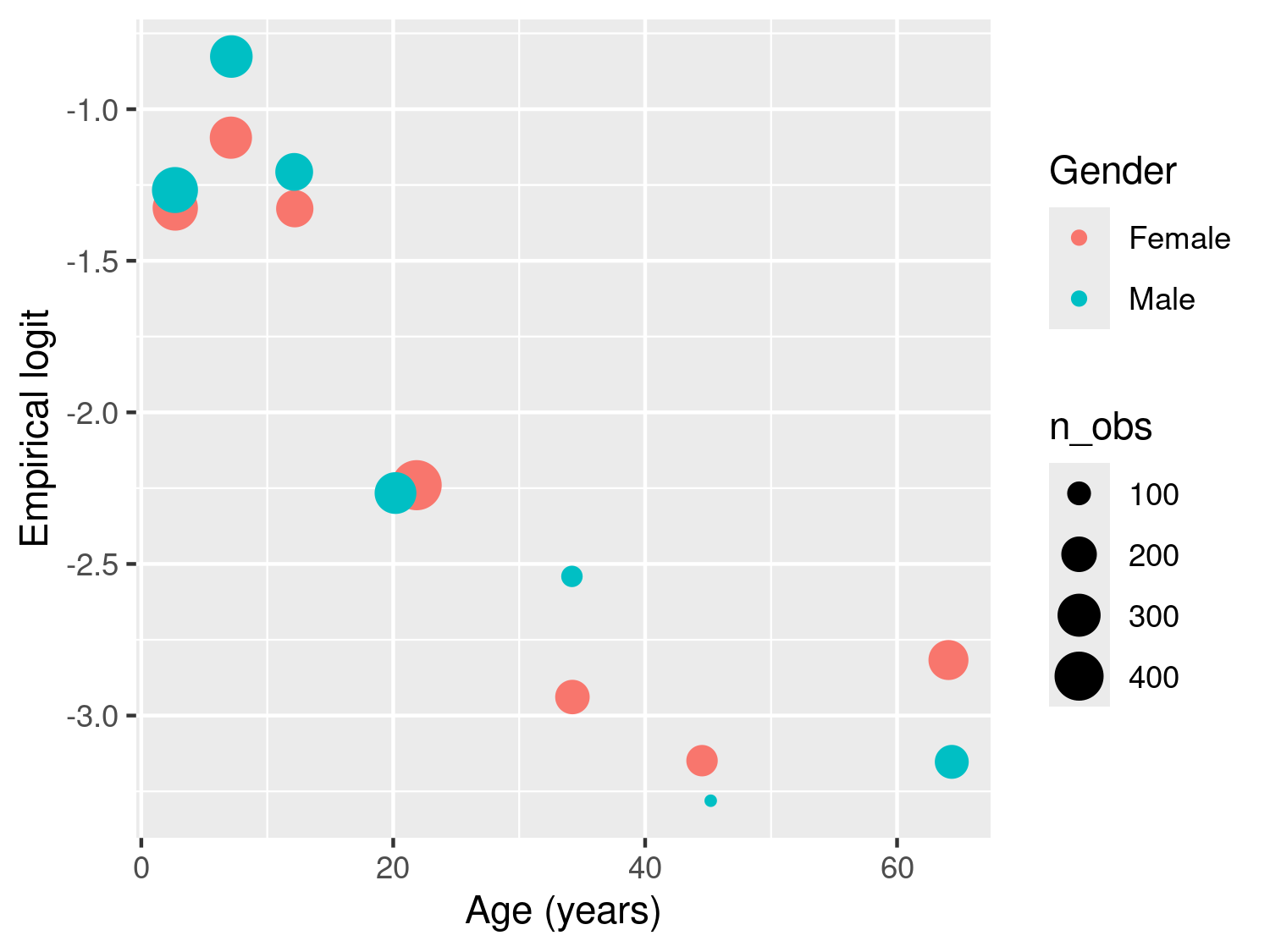

We now consider the malaria data from Kenya (Section 1.4.3) where the main outcome is the result from a rapid diagnostic test (RDT) for malaria from individuals within households. In this case, because the outcome only takes two values, 1 for a positive RDT test result and 0 otherwise, the direct application of the empirical logit from Equation 3.1 would not help us to generate informative scatter plots. Throughout the book, we will consider the data from the community survey only, hence we work with a subset of the data which we shall name malkenya_comm

malkenya_comm <- malkenya[malkenya$Survey=="community", ]To show how this issue can be overcome, let us consider the variables age and gender. To generate a plot that can help us understand between the relationship with malaria prevalence and the two risk factors, we proceed as follows.

Using the cut function, we first split age (in years) into classes through the argument breaks. The classification of age into \([0,5]\), \((5, 10]\) and \((10, 15]\) is common in many malaria epidemiology studies, as children are one of the groups at highest risk of malaria. The choice of the other classes of age reflects instead is made to balance the number of observations falling in each of the classes.

We then compute the empirical logit, using the total number of cases within age group and by gender. For a given age group and gender, which we denote as \(\mathcal{C}\), the empirical logit in Equation 3.1, now takes the form \[

l_{\mathcal{C}} = \log\left\{\frac{\sum_{i \in \mathcal{C}} y_{i} + 0.5}{|\mathcal{C}|- \sum_{i \in \mathcal{C}} y_{i} + 0.5} \right\}

\tag{3.4}\] where \(y_i\) are the individual binary outcomes and \(i\in \mathcal{C}\) is used to indicate that the sum is carried out over all the individuals who belong the class \(\mathcal{C}\), identified by a specific age group and gender. Finally, \(|\mathcal{C}|\) is the number of individuals who fall within \(\mathcal{C}\). In the code above, the empirical logit in Equation 3.4 is computed using the aggregate function. An inspection of the object age_class_data, a data frame, shows that the empirical logit is found in the column named RDT.

# Computation of the average age within each age group

age_class_data$age_mean_point <- aggregate(Age ~ Age_class + Gender,

data = malkenya_comm,

FUN = mean)$Age

# Number of individuals within each age group, by gender

age_class_data$n_obs <- aggregate(Age ~ Age_class + Gender,

data = malkenya_comm,

FUN = length)$AgeIn order to generate the scatter-plot, we compute the average age within each age group by gender, and use these as our values for the x-axis. Note that since we only need to obtain the average age from this output, we use $Age to extract this only and allocate to the column age_mean_point. Finally, we also compute the number of observations within each of the classes and place this in n_obs.

ggplot(age_class_data, aes(x = age_mean_point, y = RDT,

size = n_obs,

colour = Gender)) +

geom_point() +

labs(x="Age (years)",y="Empirical logit")

The resulting plot in Figure 3.4 shows the empirical logit against age by gender, with the size of each of the points proportional to the number of observations falling within each class. The observed patterns are explained by the fact that young children, especially those under the age of five, are particularly vulnerable to severe malaria infections. This is primarily due to their immature immune systems and lack of acquired immunity. As individuals grow older, they generally develop partial immunity to malaria through repeated exposure to the disease. This acquired immunity can provide some level of protection against severe malaria. At the same time, gender roles and activities can influence exposure to malaria-carrying mosquitoes. For example, men may spend more time outdoors for work or other activities, increasing their exposure to mosquito bites and thus their risk of infection. In addition, there are also biological factors to consider. Hormonal and genetic differences between males and females may also contribute to variations in immune responses to malaria infection. The interaction between age and gender is complex and may vary depending on the specific context and population being studied. A 2020 report from the Bill & Melinda Gates foundation provides a detailed overview of this and other aspects related to gender and malaria (Katz and Bill & Melinda Gates Foundation 2020).

To account for age in a model for malaria prevalence, several approaches are possible, some of which have been developed using biological models (Smith et al. 2007). To model the patterns observed in Figure 3.4, we can follow the same approach used in the previous section to model the relationship between elevation and river-blindness prevalence. First, let us consider age without the effect of gender. Let \(p_{j}(x_i)\) denote the probability of a positive RDT for the \(j\)-th individual living in a household at location \(x_i\). Assuming that malaria risk reaches its peak at 15 years of age, we can capture the non-linear relationship using a linear spline with two knots, one at 15 years and a second one at 40 years. This is expressed as \[ \begin{aligned} \log\left\{\frac{p_{j}(x_i)}{1-p_j(x_i)}\right\} = \beta_{0} + \beta_{1}a_{ij}+\beta_{2} \times\max\{a_{ij}-15, 0\} + \beta_{3}\max\{a_{ij}-40, 0\} \end{aligned} \tag{3.5}\] where \(a_{ij}\) is the age, in years, for the \(j\)-th individual at household \(i\). Based on this model the effect of age on RDT prevalence is \(\beta_{1}\), for \(a_{ij} < 15\), \(\beta_{1}+\beta_{2}\), for \(15 < a_{ij} < 40\), and \(\beta_{1}+\beta_{2}+\beta_{3}\) for \(a_{ij} > 40\).

Figure 3.4 suggests a potential interaction between age and gender, as the difference in RDT prevalence between males and females appears to vary with age, with wider gaps observed among individuals older than 20 years. To examine this pattern using a standard Binomial regression model, the linear predictor for RDT prevalence can be expressed as \[ \begin{aligned} \log\left\{\frac{p_{j}(x_i)}{1-p_j(x_i)}\right\} = \beta_{0} + (\beta_{1} + \beta_{1}^*g_{ij})\times a_{ij}+(\beta_{2} + \beta_{2}^*g_{ij})\times\max\{a_{ij}-15, 0\} + \\ (\beta_{3} + \beta_{3}^*g_{ij}) \times \max\{a_{ij}-40, 0\} \end{aligned} \tag{3.6}\] where \(g_{ij}\) is the indicator for gender, with 1 corresponding to male and 0 to female. The coefficients \(\beta_{1}^*\), \(\beta_{2}^*\) and \(\beta_{3}^*\) thus quantify the differences in risk between the two genders for ages below 15 years, between 15 and 40 years, and above 40 years, respectively. If all of those coefficients were 0, the model in Equation 3.5 would be recovered.

glm_age_gender_interaction <- glm(RDT ~ Age + Gender:Age +

pmax(Age-15, 0) + Gender:pmax(Age-15, 0) +

pmax(Age-40, 0) + Gender:pmax(Age-40, 0),

data = malkenya_comm, family = binomial)

summary(glm_age_gender_interaction)

##

## Call:

## glm(formula = RDT ~ Age + Gender:Age + pmax(Age - 15, 0) + Gender:pmax(Age -

## 15, 0) + pmax(Age - 40, 0) + Gender:pmax(Age - 40, 0), family = binomial,

## data = malkenya_comm)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -1.05835 0.10245 -10.331 < 2e-16 ***

## Age -0.03384 0.01310 -2.584 0.00978 **

## pmax(Age - 15, 0) -0.03975 0.02356 -1.687 0.09162 .

## pmax(Age - 40, 0) 0.09170 0.02482 3.695 0.00022 ***

## Age:GenderMale 0.01428 0.01221 1.170 0.24202

## GenderMale:pmax(Age - 15, 0) -0.03625 0.03145 -1.153 0.24908

## GenderMale:pmax(Age - 40, 0) 0.02451 0.04320 0.567 0.57052

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 2875.8 on 3351 degrees of freedom

## Residual deviance: 2673.8 on 3345 degrees of freedom

## AIC: 2687.8

##

## Number of Fisher Scoring iterations: 5The code above shows how to fit the model specified in Equation 3.6. The terms Age, pmax(Age-15, 0) and pmax(Age-40, 0) respectively correspond to \(\beta_{1}\), \(\beta_{2}\) and \(\beta_{3}\), whilst the Gender:Age, Gender:pmax(Age-15, 0) and Gender:pmax(Age-40, 0) to \(\beta_{1}^*\), \(\beta_{2}^*\) and \(\beta_{3}^*\), respectively. In the summary of the fitted model, we observe that the interaction coefficients are non-statistically significant. However, removing the interaction based on the fact that each of the coefficients have each p-values larger than the conventional level of 5% would be wrong. Instead we should carry out the likelihood ratio test, as shown below.

glm_age_gender_no_interaction <- glm(RDT ~ Age + pmax(Age-15, 0) + pmax(Age-40, 0),

data = malkenya_comm, family = binomial)

anova(glm_age_gender_no_interaction, glm_age_gender_interaction, test = "Chisq")To carry out the likelihood ratio test to assess the null hypothesis that \(\beta_{1}^*=\beta_{2}^*=\beta_{3}^*=0\), we first fit the simplified nested model under this null hypothesis. The likelihood ratio test can then be carried out using the anova command as shown. The p-value indicates that we do not find evidence against the null hypothesis, hence in our analysis of the data we might favour the simplified model that does not assume an interaction between the two genders. However, it is important to note that the likelihood used here comes from a standard Binomial model that does not account for residual spatial correlation. As will be shown later in the book, ignoring such correlation typically leads to underestimated standard errors and, consequently, p-values that are smaller than they should be. The resulting p-values from the likelihood ratio test may therefore be unreliable. Nonetheless, these exploratory analyses remain useful for identifying patterns that may or may not persist once a more flexible model is fitted. In practice, if an interaction appears weak or non-significant at this stage, one would expect even less evidence for it under a model that captures additional variability. Conversely, when certain relationships are scientifically plausible - such as age-specific differences between genders - it may still be appropriate to retain them regardless of their statistical significance.

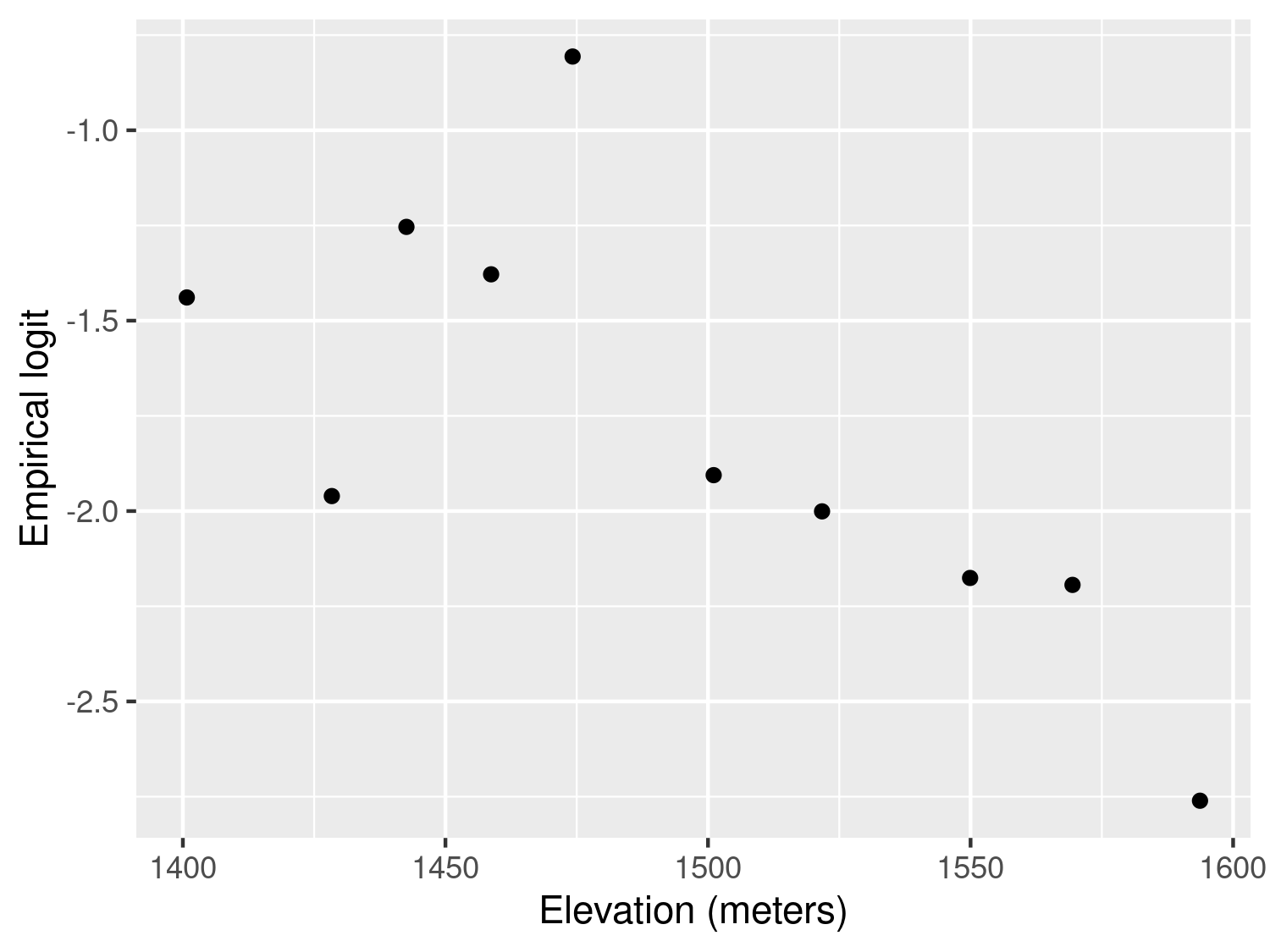

The approach just illustrated, can also be applied to explore the association with other continuous variables that are a property of the household and not of the individual. Let us, for example, consider the variable elevation from the malkenya data-set.

Following the same approach used for age, we first split elevation into classes. To define these, we use the deciles of the empirical distribution of elevation which we calculate using the quantile function above. In this way we also ensure that the number of observations falling within each class of elevation is approximately the same.

# Computation of the empirical logit by classes of elevation

elev_class_data <- aggregate(RDT ~ elevation_class,

data = malkenya_comm,

FUN = function(y)

log((sum(y)+0.5)/(length(y)-sum(y)+0.5)))

# Computation of the average elevation within each class of elevation

elev_class_data$elevation_mean <- aggregate(elevation ~ elevation_class,

data = malkenya_comm,

FUN = mean)$elevationWe then compute the empirical logit and the average elevation for each class of elevation. The empirical logit is computed as already defined in Equation 3.4, where now the definition of \(\mathcal{C}\) is given by a specific decile used to split the distribution of elevation.

ggplot(elev_class_data, aes(x = elevation_mean, y = RDT),

size = n_obs) +

geom_point() +

labs(x="Elevation (meters)",y="Empirical logit")

The resulting plot in Figure 3.5 shows an approximately linear relationship with decreasing values of the empirical logit for increasing elevation. This is expected because the cooler environment at higher altitudes is less favourable to the development of the overall mosquito life cycle.

An alternative approach to generate a scatter plot for assessing the association between elevation and the empirical logit would be to aggregate the data at household level, rather than using classes of elevation. However, this approach does not work as the one illustrated above when only one individual is sampled for each household. In the case of the malkenya data, the great majority of the households only include one individual making this second approach less useful than the one illustrated.

Other more sophisticated approaches for the exploration of the associations between covariates and binary outcomes are available. For example, the use of the empirical logit could be avoided by using non-parametric regression methods for Binomial outcomes (Bowman 1997), also implemented in sm package in R. Our view is that a careful exploratory analysis based on simpler methods, such as those illustrated above, can be equally effective to inform the model formulation.

One of the main advantages in the use of covariates is the ability to attribute part of the variation of the outcome to a set of measured variables and, hence, reduce the uncertainty of our inferences. However, it is almost always the case that the finite number of covariates at our disposal is not enough to fully explain the variation in the outcome. The existence of unmeasured covariates that are related to the modelled outcome give rise to the so-called residual variation. In a standard linear regression model the extent to which we are able to account for important covariates is directly linked to the size of the variance of the residuals. In the case of count data, instead, this link is less well defined and one of the main consequences of the omission of covariates, which we address in this chapter, is overdispersion.

Overdispersion occurs when the variability of the data is larger than that implied by the generalized linear model (GLM) fitted to them. For example, if we consider the Binomial distribution, the presence of overdispersion implies that \(V(Y_i) > n_i \mu_{i}(1-\mu_i)\), where we recall that \(n_i\) is the Binomial denominator and \(mu_i\) is the probability of “success” for each of the \(n_i\) Bernoulli trials; for a Poisson distribution with \(E(Y_i) = \mu_i\), instead, overdispersion implies that \(V(Y_i) > \mu_{i}\).

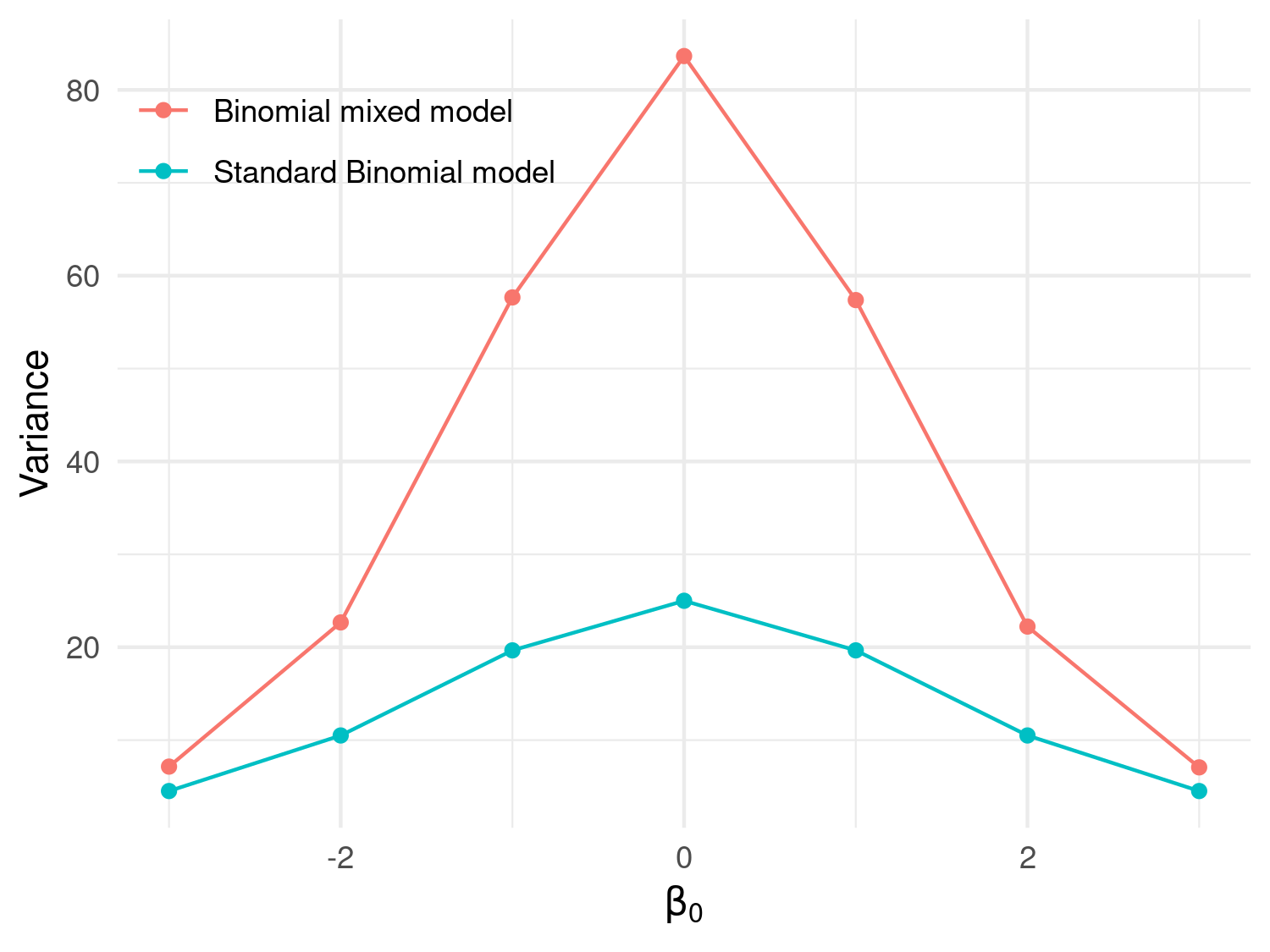

Assessment of the overdispersion for count data can be carried out in different ways depending on the goal of the statistical analysis. Since the focus of this book is to illustrate how to formulate and apply geostatistical models, the most natural approach to assess overdispersion is through the use of generalized linear mixed models (GLMMs). The class of GLMMs that we consider in this and the next section are obtained by replacing the spatial Gaussian process \(S(x_i)\) in introduced in Equation 1.4 with a set of mutually independent random effects, which we denote as \(Z_i\), and thus write \[ g(\mu_i) = d(x_i)^\top \beta + Z_i. \tag{3.7}\] The model above accounts for the overdispersion in the data through \(Z_i\) whose variance can be interpreted as an indicator of the amount of overdispersion. To show this, we carry out a small simulation as follows. For simplicity, we consider the Binomial mixed model with an intercept only, hence \[ \log\left\{\frac{\mu_i}{1-\mu_i}\right\} = \beta_0 + Z_i \tag{3.8}\] and assume that the \(Z_i\) follow a set of mutually independent Gaussian variables with mean 0 and variance \(\tau^2\). In our simulation we vary \(\beta_0\) over the set \(\{-3, -2, -1, 0, 1, 2, 3\}\) and set \(\tau^2=0.1\) and the binomial denominators to \(n_i = 100\). For a given value of \(\beta_0\), we then proceed through the following iterative steps.

Simulate 10,000 values for \(Z_i\) from a Gaussian distribution with mean 0 and variance \(\tau^2\).

Compute the probabilities \(\mu_i\) based on Equation 3.8.

Simulate 10,000 values from a Binomial model with probability of success \(\mu_i\) and denominator \(n_i\).

Compute the empirical variance of the counts \(y_i\) simulated in the previous step.

Change the value of \(\beta_0\) and repeat the previous steps, for all the values of \(\beta_0\).

The code below shows the implementation of the above steps in R.

# Number of simulations

n_sim <- 10000

# Variance of the Z_i

tau2 <- 0.1

# Binomial denominator

bin_denom <- 100

# Intercept values

beta0 <- c(-3, -2, -1, 0, 1, 2, 3)

# Vector where we store the computed variance from

# the simulated counts from the Binomial mixed model

var_data <- rep(NA, length(beta0))

for(j in 1:length(beta0)) {

# Simulation of the random effects Z_i

Z_i_sim <- rnorm(n_sim, sd = sqrt(tau2))

# Linear predictor of the Binomial mixed model

lp <- beta0[j] + Z_i_sim

# Probabilities of the Binomial distribution conditional on Z_i

prob_sim <- exp(lp)/(1+exp(lp))

# Simulation of the counts from the Binomial mixed model

y_i_sim <- rbinom(n_sim, size = bin_denom, prob = prob_sim)

# Empirical variance from the simulated counts

var_data[j] <- var(y_i_sim)

}

# Probabilities from the standard Binomial model (Z_i = 0)

probs_binomial <- exp(beta0)/(1+exp(beta0))

# Variance from the standard Binomial model

var_bimomial <- bin_denom*probs_binomial*(1-probs_binomial)plot_df <- data.frame(

beta0 = beta0,

`Binomial mixed model` = var_data,

`Standard Binomial model`= if (exists("var_binomial")) var_binomial else var_bimomial

)

df_long <- tidyr::pivot_longer(

plot_df,

cols = c(Binomial.mixed.model, Standard.Binomial.model),

names_to = "Model",

values_to = "Variance"

)

# Pretty legend labels

df_long$Model <- factor(

df_long$Model,

levels = c("Binomial.mixed.model", "Standard.Binomial.model"),

labels = c("Binomial mixed model", "Standard Binomial model")

)

ggplot(df_long, aes(x = beta0, y = Variance, color = Model)) +

geom_point() +

geom_line() +

labs(x = expression(beta[0]), y = "Variance") +

theme_minimal() +

theme(legend.title = element_blank(),

legend.position = c(0.2, 0.85))

Figure 3.6 shows the results of the simulation. In this figure, the red line corresponds to the variance of a standard Binomial model, obtained by setting \(Z_i=0\) and computed as \(n_i \mu_i (1-\mu_i)\) with \(\mu_i = \exp\{\beta_0\}/(1+\exp\{\beta_0\})\). As expected, this plot shows that the variance of the simulated counts from the mixed model in Equation 3.8 exhibit a larger variance than would be expected under the standard Binomial model. It also indicates that the chosen value for the variance of \(Z_i\) of \(\tau^2 = 0.1\) corresponds to a significant amount of dispersion. One way to relate \(\tau^2\) to the amount of overdispersion is by considering that, following from the properties of a univariate Gaussian distribution, a priori the \(Z_i\) will take values between \(-1.96 \sqrt{\tau^2}\) and \(+1.96 \sqrt{\tau^2}\) with approximately 95\(\%\) probability. That implies that \(\exp\{Z_i\}\), which expresses the effect of the random effects on the odds ratios, will be with 95\(\%\) probability between \(\exp\{-1.96 \sqrt{\tau^2}\}\) and \(\exp\{+1.96 \sqrt{\tau^2}\}\). By replacing \(\tau^2\) with the chosen values for the simulation, those two become about 0.54 and 1.86, meaning that with the \(Z_i\) with \(95\%\) probability will have a multiplicative effect on the odds ratios between \(0.54\) and \(1.86\).

We encourage you to do Exercise 1 and Exercise 2 at the end of this chapter, to further explore how generalized linear mixed models can be used as a tool to account for overdispersion.

We now illustrate how to fit a generalized linear mixed, using the anopheles data-set as an example. We consider two models: an intercept-only model and one that uses elevation as a covariate. Let \(\mu(x_i)\) be the number of mosquitoes captured at a location \(x_i\); then the linear predictor with elevation as a covariate takes the form \[

\log\{\mu_i\} = \beta_{0} + \beta_{1} d(x_i) + Z_i

\tag{3.9}\] where \(d(x_i)\) indicates the elevation in meters at location \(x_i\) and the \(Z_i\) are independent and identically distributed Gaussian variables with mean 0 and variance \(\tau^2\). The model with an intercept only is simply obtained by setting \(\beta_1 = 0\).

We carry out the estimation in R using the glmer function from the lme4 package (see Bates et al. (2015) for a detailed tutorial). The glmer function implements the maximum likelihood estimation for generalized linear mixed models. The code below shows how the glmer is used to carry out this step for the model in Equation 3.9 and the one without covariates.

# Create the ID of the location

anopheles$ID_loc <- 1:nrow(anopheles)

# Poisson mixed model with elevation

fit_glmer_elev <- glmer(An.gambiae ~ scale(elevation) + (1|ID_loc), family = poisson,

data = anopheles, nAGQ = 25)

# Poisson mixed model with intercept only

fit_glmer_int <- glmer(An.gambiae ~ (1|ID_loc), family = poisson,

data = anopheles, nAGQ = 25)To fit the model with glmer, we first must create a variable in our data-set that allows us to identify the location associated with each count. In this case, since every row corresponds to a different location, we simply use the row number to identify the locations and save this in the ID_loc variable. The random effects \(Z_i\) are then included in the model by adding (1 | ID_loc) in the formula of the glmer function.

When introducing the variable elevation, we standardize it so that its mean is 0 and its variance is 1. This helps the model-fitting algorithm converge more reliably and is generally good practice when covariates have very different scales. Importantly, standardizing a variable does not change the fit of the model to the data—it only changes how we interpret the regression coefficients. In other words, the model is mathematically equivalent; only the units of measurement differ.

For example, suppose we model mosquito counts using elevation measured in metres and obtain a regression coefficient of –0.002. This means that for every one-metre increase in elevation, the expected mosquito count decreases by about 0.2%. If we instead standardize elevation so that one unit represents one standard deviation (say 150 m), the corresponding coefficient becomes –0.002 × 150 = –0.30. Both models yield identical fitted values and predictions. The only difference is that in the standardized model, the coefficient refers to a one-standard-deviation change in elevation rather than a one-metre change.

The argument nAGQ is used to define the precision of the approximation of the maximum likelihood estimation algorithm. By default nAGQ = 1, which corresponds to the Laplace approximation. Values for nAGQ larger than 1 are used to define the number of points of the adaptive Gaussian-Hermite quadrature. The general principle is that the larger nAGQ the better, but at the expense of an increased computing time. Based on the guidelines and help pages of the lme4 package, it is stated that a reasonable value for nAGQ is 25. For more technical details on this aspect, we refer the reader to Bates et al. (2015).

We can now look at the summary of the fitted models to the mosquitoes data-set.

### Summary of the model with elevation

summary(fit_glmer_elev)

## Generalized linear mixed model fit by maximum likelihood (Adaptive

## Gauss-Hermite Quadrature, nAGQ = 25) [glmerMod]

## Family: poisson ( log )

## Formula: An.gambiae ~ scale(elevation) + (1 | ID_loc)

## Data: anopheles

##

## AIC BIC logLik -2*log(L) df.resid

## 291.8 300.1 -142.9 285.8 113

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -0.89574 -0.42469 -0.09483 0.29445 0.53352

##

## Random effects:

## Groups Name Variance Std.Dev.

## ID_loc (Intercept) 0.7146 0.8453

## Number of obs: 116, groups: ID_loc, 116

##

## Fixed effects:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.53042 0.09365 16.342 <2e-16 ***

## scale(elevation) -0.19794 0.08950 -2.212 0.027 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## scale(lvtn) 0.036

### Summary of the model with the intercept only

summary(fit_glmer_int)

## Generalized linear mixed model fit by maximum likelihood (Adaptive

## Gauss-Hermite Quadrature, nAGQ = 25) [glmerMod]

## Family: poisson ( log )

## Formula: An.gambiae ~ (1 | ID_loc)

## Data: anopheles

##

## AIC BIC logLik -2*log(L) df.resid

## 294.6 300.1 -145.3 290.6 114

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -0.73816 -0.42718 -0.06941 0.26564 0.45022

##

## Random effects:

## Groups Name Variance Std.Dev.

## ID_loc (Intercept) 0.761 0.8724

## Number of obs: 116, groups: ID_loc, 116

##

## Fixed effects:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.52849 0.09584 15.95 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1From the summary of the model that uses elevation, we observe that the estimated regression coefficient \(\beta_{1}\) is statistically significantly different from 0. The interpretation of the estimated regression coefficient is the following: for an increase of about 100 meters in elevation, all other things being equal, the average number of mosquitoes decreases by about \(100\% \times [1-\exp\{-0.19794\}] \approx 18\%\). Note that when using a standardized variable, a unit increase for this corresponds to an increase in the original unstandardized variable equal to its standard deviation, which for the elevation variable is about 100 meters.

From the summaries of the two models, under Random effects:, we obtain the estimates associated with the random effects introduced in the model. In this case, since we only have introduced \(Z_i\), this part of the summary provides the maximum likelihood estimate for \(\tau^2\), the variance of \(Z_i\), which is found on the line where ID_loc is printed. We then observe that the estimates for \(\tau^2\) for the intercept-only model is 0.761, whilst for the model with elevation this is 0.7146. Note that the figures reported under Std.Dev. are simply the square root of the value reported under Variance. As expected, the introduction of elevation contributes to the explanation of the residual variation captured by \(Z_i\), though by a very small amount. The estimated values of \(\tau^2\) thus suggest that there is extra-Binomial variation in the data that is not accounted for by elevation.

In the next section, we will illustrate how to assess the presence of residual correlation for continuous measurements and overdispersed count data.

In its most basic form, the concept of spatial correlation can be succinctly encapsulated by Tobler (1970) first law of geography, which posits that “everything is interconnected, but objects in close proximity exhibit stronger relationships than those situated farther apart.” After we have identified the key variables to introduce as covariates in the model (Section 3.1.1) and, in the case of count data, assessed the presence of overdispersion (Section 3.1.2), our final exploratory step consists of assessing whether the residuals of the non spatial model show evidence of spatial correlation. Hence, in geostatistical modelling, the interest is not in the spatial correlation of the data, but rather in understanding whether the variation in the outcome unexplained by the covariates exhibits spatial correlation. We call this residual spatial correlation, to emphasize that spatial correlation is a concept relative to the covariates that we have introduced in the model.

In the context of geostatistical analysis, the tool that is generally used to assess the residual spatial correlation is the so-called empirical variogram. Before looking at the mathematical definition of the empirical variogram, let us consider a generalized linear mixed model as expressed in Equation 3.7. Our goal is then to question the assumption of independently distributed random effects \(Z_i\) by asking whether the \(Z_i\) show evidence of spatial correlation. Let \(Z_i\) and \(Z_j\) be two random effects that are associated with two different locations \(x_i\) and \(x_j\), respectively, and let us take the squared difference between the two \[ V_{ij} = (Z_i - Z_j)^2. \tag{3.10}\] How does the spatial correlation affect the value of \(V_{ij}\)? To answer this question, we can refer to the aforementioned Tobler’s law of geography. When \(x_i\) and \(x_j\) will be closer to each other, then \(Z_i\) and \(Z_j\) will also tend to be more similar to each other, thus making \(V_{ij}\) smaller, on average. On the contrary, when \(x_i\) and \(x_j\) will be further apart, then \(V_{ij}\) will become larger, on average. We can then construct the empirical variogram by considering all possible pairs of locations \(x_i\) and \(x_j\), for which we then compute \(V_{ij}\) and plot this against the distance between \(x_i\) and \(x_j\), which we denote as \(u_{ij}\). If there is spatial correlation in the random effects \(Z_i\), then this will manifest as an average increase in the \(V_{ij}\) as \(u_{ij}\) increases. However, there are still two issues that we have to address before we can generate and plot the empirical variogram.

The first issue is that we do not observe \(Z_i\) as, by definition, this is a latent variable. Hence, we require an estimate for \(Z_i\) which we can then feed into \(V_{ij}\). To emphasize this point, from now on, we shall replace Equation 3.10 with \[

\hat{V}_{ij} = (\hat{Z}_{i} - \hat{Z}_j)^2.

\tag{3.11}\] Several options are available for estimating \(Z_{i}\). Our choice is to use the model of the predictive distribution of \(Z_i\), that is the distribution of \(Z_{i}\) conditioned to the data \(y_i\). This estimator for \(Z_i\) is also readily available from the output of the lmer and glmer functions of the lme4 package, as we will illustrate later in our example in this section.

The second issue is that if we simply plot \(\hat{V}_{ij}\) against the distances \(u_{ij}\) (also known as cloud variogram), due to the high noisiness in the \(\hat{V}_{ij}\), it may be quite difficult to assess the presence of an increasing trend in the \(\hat{V}_{ij}\) and thus detect spatial correlation. Hence, it is general practice to group the distances \(u_{ij}\) into classes, say \(\mathcal{U}\), and then take average of all the \(\hat{V}_{ij}\) that fall within \(\mathcal{U}\).

We can now write the formal definition of the empirical variogram as \[ \hat{V}(\mathcal{U}) = \frac{1}{2 |\mathcal{U}|} \sum_{(i, j): (u_i, u_j) \in \mathcal{U}} \hat{V}_{ij} \tag{3.12}\] where \(|\mathcal{U}|\) denotes the number of pairs of locations that fall within the distance class \(\mathcal{U}\). The rationale behind dividing by 2 in \(1/2 |\mathcal{U}|\) from the above equation, will be elucidated in Section 3.2. When creating the empirical variogram plot, we select the midpoint values of the distance classes \(\mathcal{U}\) to represent the x-axis values.

Before we can evaluate residual spatial correlation, there remains one crucial concern: relying solely on a visual inspection of the empirical variogram is susceptible to human subjectivity. Furthermore, it is worth noting that even a seemingly upward trend observed in the empirical variogram might be merely a product of random fluctuations, rather than a reliable indication of actual residual spatial correlation. To address these concerns and enhance the objectivity of the use of the empirical variogram, one approach would involve comparing the observed empirical variogram pattern with those generated in the absence of spatial correlation. Following this principle, we then use a permutation test that allows us to generate empirical variograms under the assumption of absence of spatial correlation through the following iterative steps.

Permute the order of the locations in the data-set while keeping everything else fixed.

Compute the empirical variogram \(\hat{V}(\mathcal{U})\) for the permuted data-set.

Repeat 1 and 2 a large number of times, say 10,000.

Use the resulting 10,000 empirical variograms to compute 95\(\%\) confidence intervals, by taking the 0.025 and 0.975 quantiles of these for each distance class \(\hat{V}(\mathcal{U})\).

If the observed empirical variogram lies entirely within the envelope generated in the previous step, we conclude that there is no evidence of residual spatial correlation in the data. When, instead, parts of the empirical variogram fall outside the envelope, this suggests the presence of residual spatial dependence.

In point 5 above, the extent and pattern of these deviations can vary considerably. In many cases, the departures from the envelope are quite subtle, with only a few points of the empirical variogram falling outside, as we will see in later examples. Such patterns may indicate a weak or complex form of spatial dependence that is difficult to recover from the data. Ultimately, only the fit of a geostatistical model can provide a definitive assessment of the extent of the spatial correlation. The empirical variogram should therefore be viewed as a diagnostic tool that helps flag potential issues, rather than as conclusive evidence on its own.

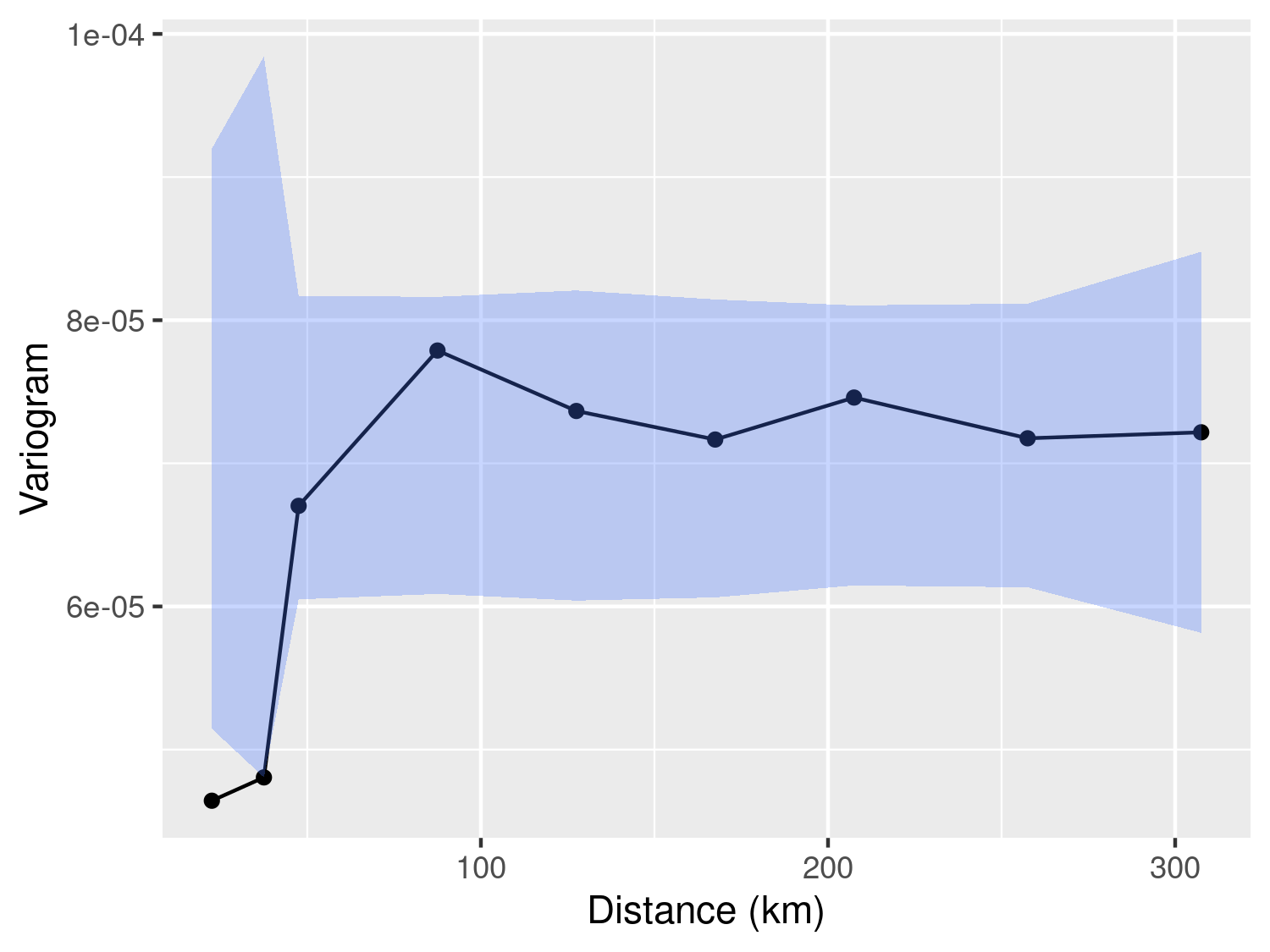

We now show an application of all the concepts introduced in this section to the Liberia data on river-blindness.

We consider the Binomial mixed model that uses the log-transformed elevation as a covariate to model river blindness prevalence, hence \[

\log\left\{\frac{p(x_i)}{1-p(x_i)}\right\} = \beta_{0} + \beta_{1}\log\{e(x_i)\} + Z_i

\tag{3.13}\] where \(e(x_i)\) is the elevation in meters at location \(x_i\) and the \(Z_i\) are i.i.d. Gaussian variables with mean 0 and variance \(\tau^2\). We first fit the model above using the glmer function.

# Convert the data-set into an sf object

liberia <- st_as_sf(liberia, coords = c("lat", "long"), crs = 4326)

# Create the ID of the location

liberia$ID_loc <- 1:nrow(liberia)

# Binomial mixed model with log-elevation

fit_glmer_lib <- glmer(cbind(npos, ntest) ~ log(elevation) + (1|ID_loc), family = binomial,

data = liberia, nAGQ = 25)

summary(fit_glmer_lib)Generalized linear mixed model fit by maximum likelihood (Adaptive

Gauss-Hermite Quadrature, nAGQ = 25) [glmerMod]

Family: binomial ( logit )

Formula: cbind(npos, ntest) ~ log(elevation) + (1 | ID_loc)

Data: liberia

AIC BIC logLik -2*log(L) df.resid

127.9 135.4 -61.0 121.9 87

Scaled residuals:

Min 1Q Median 3Q Max

-2.46033 -0.63341 -0.07633 0.61995 3.12732

Random effects:

Groups Name Variance Std.Dev.

ID_loc (Intercept) 0.003097 0.05565

Number of obs: 90, groups: ID_loc, 90

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -2.96292 0.21184 -13.987 < 2e-16 ***

log(elevation) 0.26143 0.04071 6.422 1.35e-10 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr)

log(elevtn) -0.981From the output, we observe that the estimate for \(\tau^2\) is about 0.003, indicating a moderate level of overdispersion in the data.

liberia$Z_hat <- ranef(fit_glmer_lib)$ID_loc[,1]Through the function ranef when extracting the estimates of the random effects \(Z_i\) and save these in the data set. We then use the function s_variogram from the RiskMap package to compute the empirical variogram for the estimated \(\hat{Z}_i\).

liberia_variog <- s_variogram(data = liberia,

variable = "Z_hat",

bins = c(15, 30, 40, 80, 120,

160, 200, 250, 300, 350),

scale_to_km = TRUE,

n_permutation = 200)Through the argument bins we can specify the classes of distance, previously denoted by \(\mathcal{U}\); check the help page of s_variogram to see how this is defined by default. The value passed to bins in the code above correspond to define the following classes of distance \(\mathcal{U}\): \([15, 30]\), \((30, 40]\) and so forth, with the last class being \([350, +\infty)\), i.e. all pairs of locations whose distances are above 350km. The argument n_permutation allows the user to specify the number of permutations that are performed to generate the envelope for absence of spatial correlation previously described.

dist_summaries(data = liberia,

scale_to_km = TRUE)

## $min

## [1] 3.204561

##

## $max

## [1] 514.9768

##

## $mean

## [1] 199.7156

##

## $median

## [1] 185.7845The dist_summaries function within the RiskMap package can be used for gauging the extent of the area covered by your dataset, aiding in the selection of appropriate values to be passed to the bins argument. In the provided output above, we can observe that for the Liberia dataset, the minimum and maximum distances are approximately 3km and 533km, respectively. While there is not a one-size-fits-all recommendation for setting bins, two fundamental principles should inform your decision-making. Firstly, it is advisable to avoid choosing overly large distance intervals, as the uncertainty associated with the empirical variogram tends to increase with distance due to fewer available pairs of observations for estimation. Secondly, especially when spatial correlation is not strong, it is crucial to carefully explore the behavior of the variogram at smaller distances. Consequently, it is generally advisable to experiment with different bins configurations and observe how they impact the pattern of the empirical variogram.

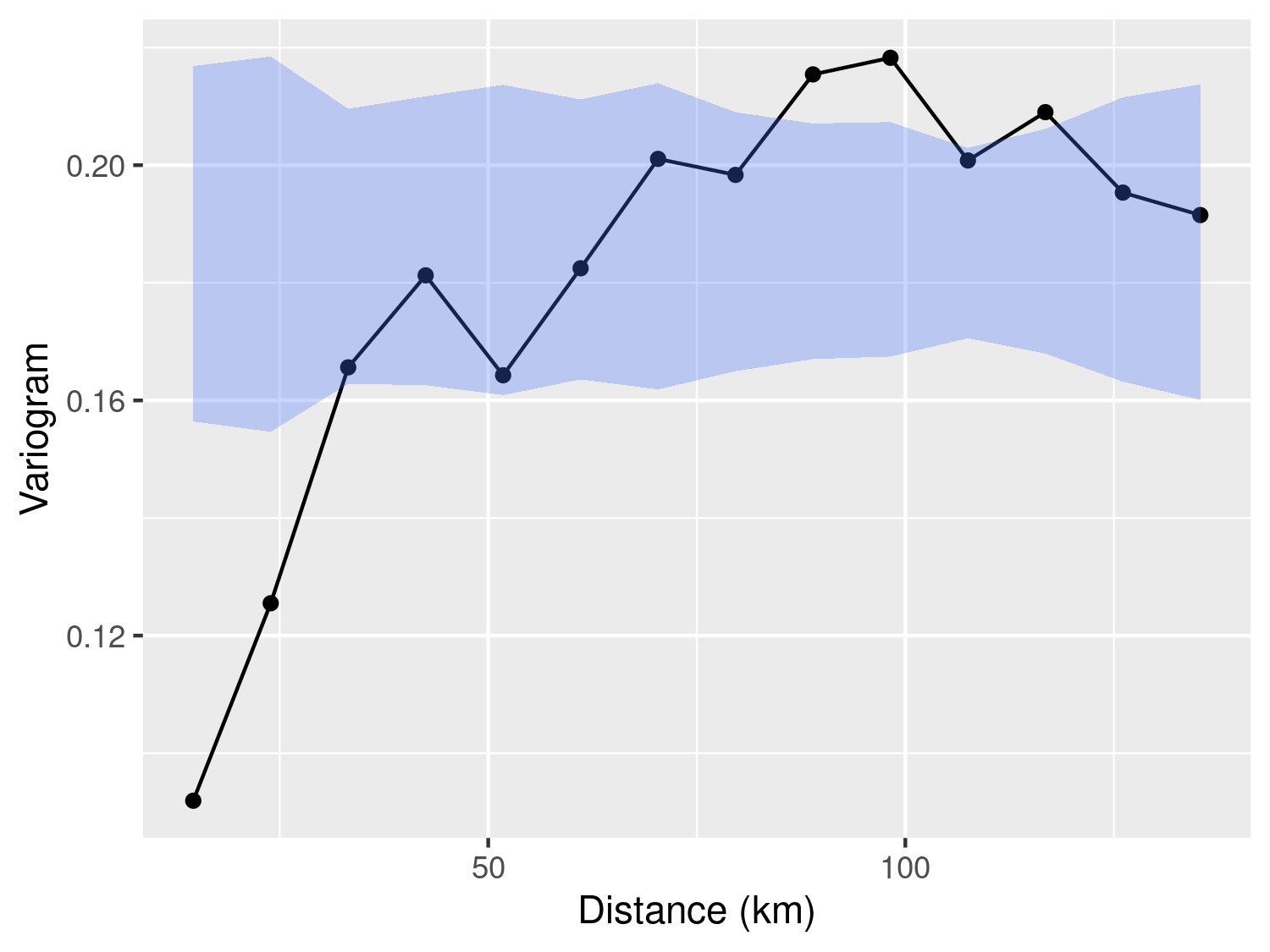

plot_s_variogram(liberia_variog,

plot_envelope = TRUE)

Finally, the plot_s_variogram function enables us to visualize the empirical variogram and, through the plot_envelope argument, include the envelope generated by the permutation procedure. As illustrated in Figure 3.7, we observe that the empirical variogram falls outside the envelope at relatively short distances, typically below 30km. However, for distances exceeding 30km, the behavior of the empirical variogram does not significantly differ from variograms generated under the assumption of spatial independence. In summary, we interpret the evidence presented in Figure 3.7 as indicative of residual spatial correlation within the data. Nevertheless, it is essential to exercise caution when attempting to ascertain the scale of the spatial correlation using the empirical variogram. As we will emphasize throughout this book, the empirical variogram’s sensitivity to the choice of bins values renders it an unreliable tool for drawing statistical inferences. In other words, we advocate employing the empirical variogram primarily to assess the presence of residual correlation.

When using a linear model to assess spatial correlation, it is important to distinguish two cases: 1) when the data contain only one location per location; 2) when more than one observation per location is available. We now consider each of these two scenarios separately.

To illustrate the use of the variogram in this context, we consider the Galicia data on lead concentration in moss samples. The simplest possible model for these data is a standard linear model without covariates, which assumes independence among observations: \[ Y_i = \beta_0 + U_i, \] {#eq-std-lm-galicia} where the \(U_i\) are independent Gaussian random variables with mean zero and variance \(\omega^2\). Our goal at this stage is to assess whether this assumption of independence is supported by the data, or whether there is evidence of spatial correlation.

However, measurement error from the sampling device may contribute to the observed variability in \(Y_i\). If such error is large relative to the underlying spatial variation, it can obscure the detection of spatial correlation in the residuals. One might therefore consider introducing an additional, location-specific random effect, say \(Z_i\), with mean zero and variance \(\tau^2\), leading to the model \[

Y_i = \beta_0 + Z_i + U_i,

\] {#eq-std-lm2-galicia} where \(Z_i\) represents spatially structured variation due to unmeasured covariates, and \(U_i\) represents unstructured variation, such as measurement error. However, this model is not identifiable because the two random effects, \(Z_i\) and \(U_i\), both contribute additively to the total variance, making it impossible to separate their effects unless one of two conditions is met:

(a) the measurement error variance \(\omega^2\) is known from external information, or

(b) multiple measurements are available at each location, allowing the two sources of variation to be estimated separately.

We will explore the latter scenario in the next section.

For the Galicia data, we do not know the measurement device precision and we only have one observation per location. However, this does not prevent us from using the variogram based on the residuals from ?eq-std-lm-galicia, while keeping in mind the limitations and uncertainty that are inherent to this exploratory tool as remarked at the end of the last paragraph.

# Fitting of the linear model and extraction of the residuals

lm_fit <- lm(log(lead) ~ 1, data = galicia)

galicia$residuals <- lm_fit$residuals

# Convert the galicia data frame into an "sf" object

galicia_sf <- st_as_sf(galicia, coords = c("x", "y"), crs = 32629)

# Compute the variogram, using the residuals from the linear model fit,

# and the 95% confidence level envelope for spatial independence

galicia_variog <- s_variogram(galicia_sf, variable = "residuals",

scale_to_km = TRUE,

bins = seq(10, 140, length = 15),

n_permutation = 10000)

# Plotting the results

plot_s_variogram(galicia_variog, plot_envelope = TRUE)

In the code above, we first fit the linear model in ?eq-std-lm-galicia and then extract the residuals from this. Note that for this simple model, the residuals of the model are obtained by simply centering the outcome to zero by subtracting its mean.

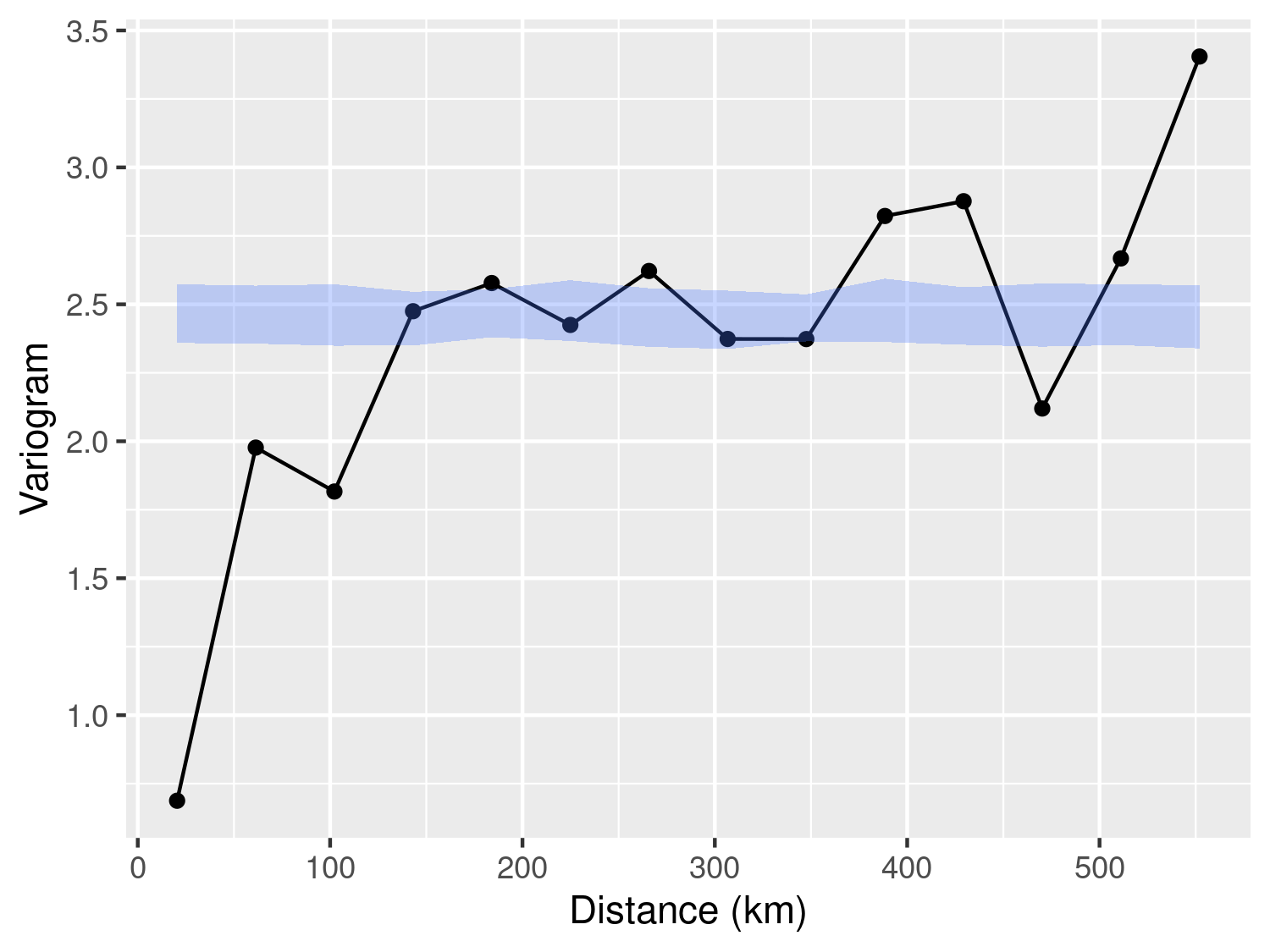

Figure 3.8 shows the empirical variogram and the 95% envelope for spatial independence. This clearly shows that the measurements of lead concentration are spatially correlated.

For the case of more than one observation per location, we shall consider the italy_sim data-set. This data-set contains 10 observations at each of 200 locations. The variable ID_loc is a numeric indicator that can be used to identify the location each observation belongs to. For this data-set, we use the population density, named pop_dens, as a log-transformed covariate; we leave you as an exercise to assess whether it is a reasonable modelling choice.

To specify a non-spatial mixed model for the outcome, we then use two subscripts: \(i\) to identify a given location; \(j\) to identify the \(j\)-th observation for a given location \(i\). We denote as \(Z_i\) the location-specific random effect and as \(U_{ij}\) the random variation due to the measurement error inherent to each observation. Hence, we write \[

Y_{ij} = \beta_0 + \beta_1 \log\{d(x_i)\} + Z_i + U_{ij},

\tag{3.14}\] where \(d(x_i)\) is the population density at location \(x_i\); as before, we use \(\tau^2\) and \(\omega^2\) to denote the variances of \(Z_i\) and \(U_{ij}\), respectively. To fit this model to the data we use the lmer function from the lme4 package.

# Fitting a linear mixed model to the italy_sim data-set

# See main text for model specification

lmer_fit <- lmer(y ~ log(pop_dens) + (1|ID_loc), data = italy_sim)

summary(lmer_fit)

## Linear mixed model fit by REML ['lmerMod']

## Formula: y ~ log(pop_dens) + (1 | ID_loc)

## Data: italy_sim

##

## REML criterion at convergence: 3390.9

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -2.85209 -0.64198 -0.01832 0.66014 3.01870

##

## Random effects:

## Groups Name Variance Std.Dev.

## ID_loc (Intercept) 2.5006 1.5813

## Residual 0.1959 0.4426

## Number of obs: 2000, groups: ID_loc, 200

##

## Fixed effects:

## Estimate Std. Error t value

## (Intercept) -0.40448 0.57613 -0.702

## log(pop_dens) 1.33798 0.07643 17.506

##

## Correlation of Fixed Effects:

## (Intr)

## log(pp_dns) -0.981

# Incorporating the estimated random effects into the data

italy_sim$rand_eff <-ranef(lmer_fit)$ID_loc[italy_sim$ID_loc,1]

# Converting the italy_sim data frame into an "sf" object

italy_sim_sf <- st_as_sf(italy_sim, coords=c("x1", "x2"), crs = 32634)

# Compute the variogram, using the random effects from the linear mixed model fit,

# and the 95% confidence level envelope for spatial independence

italy_sim_variog <- s_variogram(italy_sim_sf, variable = "rand_eff",

scale_to_km = TRUE,

n_permutation = 200)

# Plotting the results

plot_s_variogram(italy_sim_variog, plot_envelope = TRUE)

In the code above, the introduction of the \(Z_i\) random effect is specified in the lmer function though (1|ID_loc) in the formula; recall that ID_loc is the numerical indicator that identifies each location. From the summary of the model, we obtain both the estimates of the regression coefficients \(\beta_{0}\) and \(\beta_{1}\), as well for the variances \(\tau^2\) listed under Random effects:. The estimate of \(\sigma^2\) is found on the line of printed output starting with ID_loc, whilst that for \(\omega^2\) is next to Residual.

The application of the empirical variogram, whose results are shown in Figure 3.9, indicate the presence of residual correlation. This is because we observe that the solid line representing the empirical variogram falls outside of the 95% envelope for spatial independence.

In this section, we consider spatially referenced outcomes \(Y_i\) that are continuous. We first consider the simpler case of a single measurement \(Y_i\) per location \(x_i\). Recalling the class of generalized linear models introduced in Section 1.5, the linear predictor takes the form \[ \mu_{i} = d(x_i)^\top \beta + S(x_i). \tag{3.15}\] Hence, in this case, we interpret \(\beta\) as the effect on \(\mu_i\) for a unit increase in \(d(x_i)\). Let \(U_i\) denote i.i.d. random variables representing the measurement error associated with \(Y_i\), each having mean zero and variance \(\omega^2\). Thanks to the linear properties of Gaussian random variables, we can also express the linear model in a compact expression, as \[ Y_i = \mu_i + U_i = d(x_i)^\top \beta + S(x_i) + U_i. \tag{3.16}\] To fully specify a geostatistical model for our dataset, we must address two critical aspects.

As illustrated in the previous sections, the initial step of exploratory analysis allows us to handle the first aspect, where we determine the regression relationship between covariates and the mean value \(\mu_i\). However, based on existing methods of exploratory analysis, it is difficult to understand what is a suitable correlation function at this stage. A commonly recommended starting point is the Matern correlation function (see Equation 1.5), which offers considerable flexibility in capturing a wide range of correlation structures, under the assumption of stationarity and isotropy. As we shall illustrate in the next example, even estimating a Matern correlation function is a task that poses many inferential challenges due to the poor identifiability, especially, of its smoothness parameter \(\kappa\).

In this section we analyse the Galicia data using a linear geostatistical model for the log-transformed lead concentration, which we denote as \(Y_i\). Since we do not use covariates, we then write the model as \[ Y_i = \mu + S(x_i) + U_i \tag{3.17}\] where were \(S(x)\) is a Matern process with variance \(\sigma^2\), scale parameter \(\phi\) and and smoothness parameter \(\kappa\); the \(U_i\) correspond to the measurement error term and denote with \(\omega^2\) their variance.

We carry out the parameter estimation of the model using the glgpm function from the RiskMap package. This function implements maximum likelihood estimation for generalized linear mixed models using a Matern correlation function, while fixing the smoothness parameter \(\kappa\) at prespecified value by the user. The object passed to the argument data in glpm can either be a data.frame object or an sf object. Below we illustrate the use of glgpm while distinguishing between these two cases.

# Parameter estimation when the argument passed to `data` is a data-frame

fit_galicia <-

glgpm(log(lead) ~ gp(x, y, kappa = 1.5), data=galicia, family = "gaussian",

crs = 32629, scale_to_km = TRUE, messages = FALSE)The code above shows the use of glgpm by passing galicia as a data.frame object to data. The specification of the Gaussian process \(S(x)\) is done through the addition of the term gp() in the formula. The function gp allows you to specify the columns of the coordinates in the data, in this case x and y, the smoothness parameter through the argument kappa, set to 1.5 in this example. In the help page of gp, you can see that by default the nugget term (denoted in this book by the random variable \(Z_i\)) is excluded from the model by default; to include and estimate the variance parameter of the nugget, you should set nugget=NULL in the gp() function. However, doing so for a linear model that only has one observation per location will generate an error message as this is a non-identifiable model for the same reasons given in Section 3.1.3.2.2. However, if the measurement error variance is known this can be fixed by the user using the argument fix_var_me and the inclusion of the nugget term is then possible (to better understand this point, try Exercise 7 at the end of this chapter).

The argument crs is used to specify the coordinate reference system (CRS) of the data. For the Galicia data, as well as for every other data-set used in this book, the CRS is reported in the help page description of the data-set. If crs is not specified, the function will assume that the coordinates are in longitude/latitude format and will use these without applying any transformation. Finally, the argument scale_to_km, is used to specify whether the distances between locations should be scaled to kilometers or maintained in meters; this argument will not affect the scale, and thus the interpretation of the spatial correlation parameter \(\phi\).

The code above shows the alternative approach to estimate the model, when the argument passed to data is an sf object. In this case, the data-set galicia is converted into an sf object before the use of the glgpm function using st_as_sf. When when then fit the linear geostatistical model with galicia_sf, the only differences with the previous chunk of code that used galicia instead, is that the coordinates names in gp() and the crs argument in glgpm do not need to be specified as they are both directly obtained from galicia_sf.

summary(fit_galicia)

## Call:

## glgpm(formula = log(lead) ~ gp(x, y, kappa = 1.5), data = galicia,

## family = "gaussian", crs = 32629, scale_to_km = TRUE, messages = FALSE)

##

## Linear geostatistical model

## Link: identity

## Inverse link function = x

##

## 'Lower limit' and 'Upper limit' are the limits of the 95% confidence level intervals

##

## Regression coefficients

## Estimate Lower limit Upper limit StdErr z.value p.value

## (Intercept) 0.707418 0.552762 0.862075 0.078908 8.9651 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Estimate Lower limit Upper limit

## Measuremment error var. 0.0154636 0.0051797 0.0462

##

## Spatial Gaussian process

## Matern covariance parameters (kappa=1.5)

## Estimate Lower limit Upper limit

## Spatial process var. 0.17127 0.13303 0.2205

## Spatial corr. scale 9.02085 7.59521 10.7141

## Variance of the nugget effect fixed at 0

##

## Log-likelihood: 69.03029

## AIC: -130.0606We can then inspect the point and interval estimates for the model through the summary function of the model as shown in the code chunk above. This output is presented in three sections: in the first section, we have the results for the regression coefficients; in the second section, we have the estimate for the variance of the measurement error component, \(\omega^2\) whose point estimate is about 0.015; in the final section, we have the estimates for the parameters of the spatial covariance function, \(\sigma^2\) and \(\phi\) which are about 0.171 and 9.021 (km), respectively. The message Variance of the nugget effect fixed at 0 indicates that the nugget has not been included in the mode; try Exercise 7 to see how this summary changes in the presence of the nugget term.

At this point, you may be wondering, why we have used 1.5 for the value of \(\kappa\) and whether there is a statistical approach to find the most suitable value for this. We show such an approach in the code below. However, before examining the code, we would like to point out an important aspect that relates to how the value of \(\kappa\) can affect the estimate of the measurement error variance \(\omega^2\). As we have shown, in Section 1.5.1, values of \(\kappa\) that are closer to zero will give a rougher and less regular surface for \(S(x)\). In the case of a single observation, when \(\kappa\) is closer to zero, it will thus become increasingly difficult to estimate \(\omega^2\) since the likelihood function may attribute most of the noisiness to \(S(x)\) and rely less on \(U_i\) to explain the unstructured random variation found in the data. As a consequence of this, we can expect that for value of \(\kappa\) closer to zero, the estimates of \(\omega^2\) will also be smaller and, on the contrary, when \(\kappa\) is larger, the estimates of \(\omega^2\) will also increase. The results generated in the below clearly illustrate this point.

# Number of the values chosen for kappa

n_kappa <- 10

# Set of values for kappa

kappa_values <- seq(0.5, 3.5, length = n_kappa)

# Vector that will store the values of the likelihood function

# evaluated at the maximum likelihood estimate

llik_values <- rep(NA, length = n_kappa)

# Vector that will store the maximum likelihood estimates

# of the variance of the measurement error

sigma2_me_hat <- rep(NA, length = n_kappa)

# List that will contain all the geostatistical models fitted for

# the different values of kappa specified in kappa_values

fit_galicia_list <- list()

for(i in 1:n_kappa) {

fit_galicia_list[[i]] <- glgpm(log(lead) ~ gp(x, y, kappa = kappa_values[i]),

data=galicia, family = "gaussian",

crs = 32629, scale_to_km = TRUE, messages = FALSE)

llik_values[i] <- fit_galicia_list[[i]]$log.lik

sigma2_me_hat[i] <- coef(fit_galicia_list[[i]])["sigma2_me"]

}

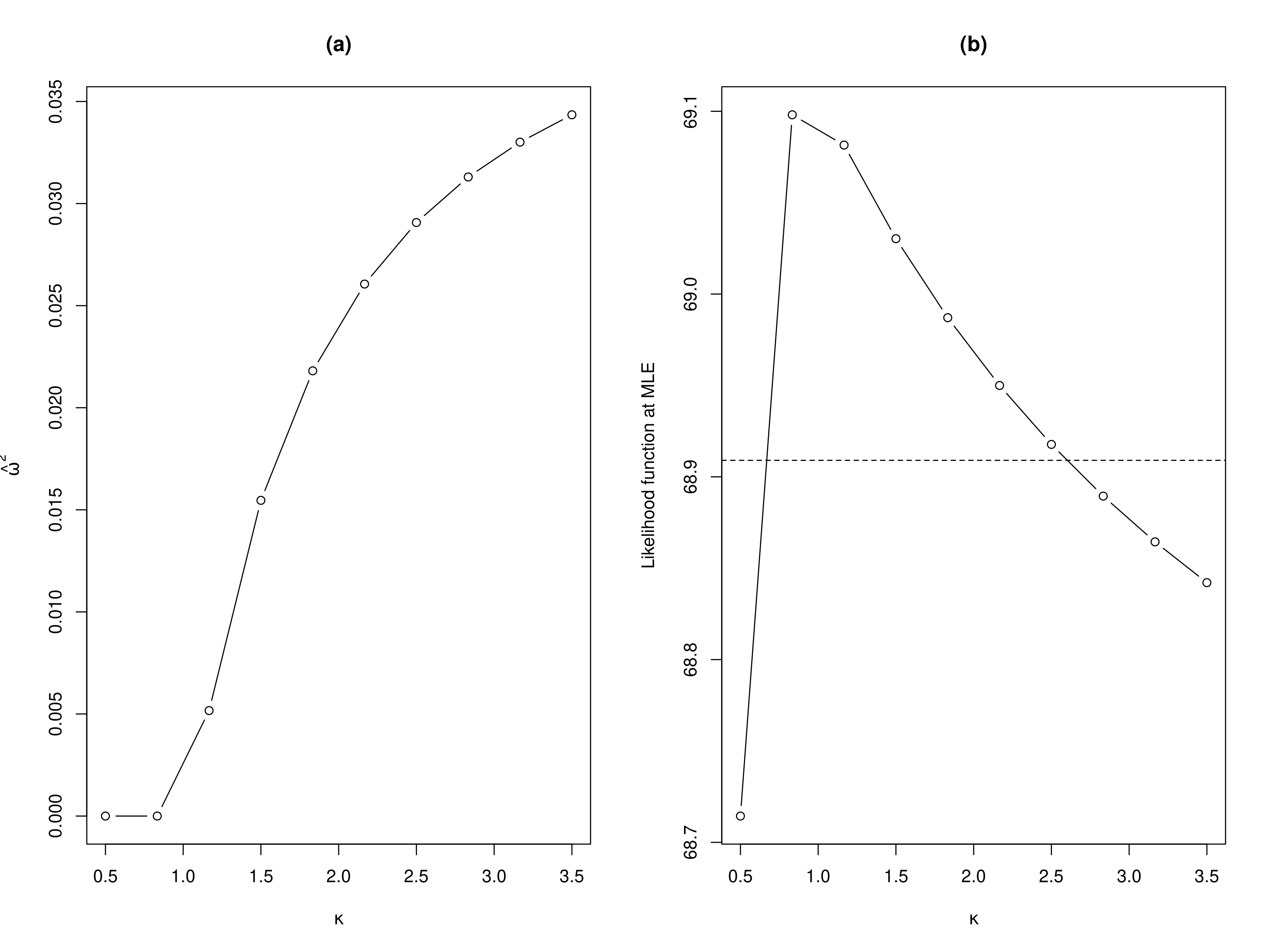

By examining the results shown in panel (a) of Figure 3.10, we observe that, as expected, smaller values for \(\kappa\) leads to smaller point estimates for \(\omega^2\) and viceversa. This begs the question, what should be our chosen value for \(\kappa\)?

To answer this question, a natural approach is to estimate the model for different values of \(\kappa\) and see which one gives the best fit to the data, according to the likelihood function. In panel (b) of Figure 3.10, we show the results of this approach where we considered 10 values for \(\kappa\) within the range 0.5 to 3.5 (see code above). In this plot, we have also added a horizontal line to help us to approximate a range of the most plausible value for \(\kappa\). More precisely, the horizontal dashed line is computed by taking the maximum observed values of the likelihoods computed for the different values of \(\kappa\), say \(\hat{M}\) and we subtract the quantile 0.95 of a \(\chi^2\) distribution with 1 degree of freedom. In R this horizontal line is obtained as

where llik_values is as defined in the previous chunk of code. Note that this approach is essentially constructing the profile likelihood for \(\kappa\) which one could use to derive a confidence interval for \(\kappa\) with a finer segmentation for kappa_values. However, our current objective is not to derive the confidence interval for \(\kappa\), but rather to gain a broad understanding of the \(\kappa\) values supported by the dataset. The values of \(\kappa\) that correspond to likelihood values above the horizontal line are approximately between 0.75 and 2.75. Hence, selecting \(\kappa=1.5\) seems to be a reasonable one in this case. Now, you may be pondering: are there values other than \(\kappa=1.5\) that could fit the data even better? Our answer is that it is not worth the effort to try estimate \(\kappa\) more precisely because it is empirically very difficult and, under some scenarios, even impossible. Estimating \(\kappa\) poses a well-documented challenge in geostatistics (Zhang (2004)), which justifies our adoption of a pragmatic approach that sets it at a predefined value. This issue is also further exacerbated when analyzing count data, which tend to be less informative about the correlation structure than continuously measured data.

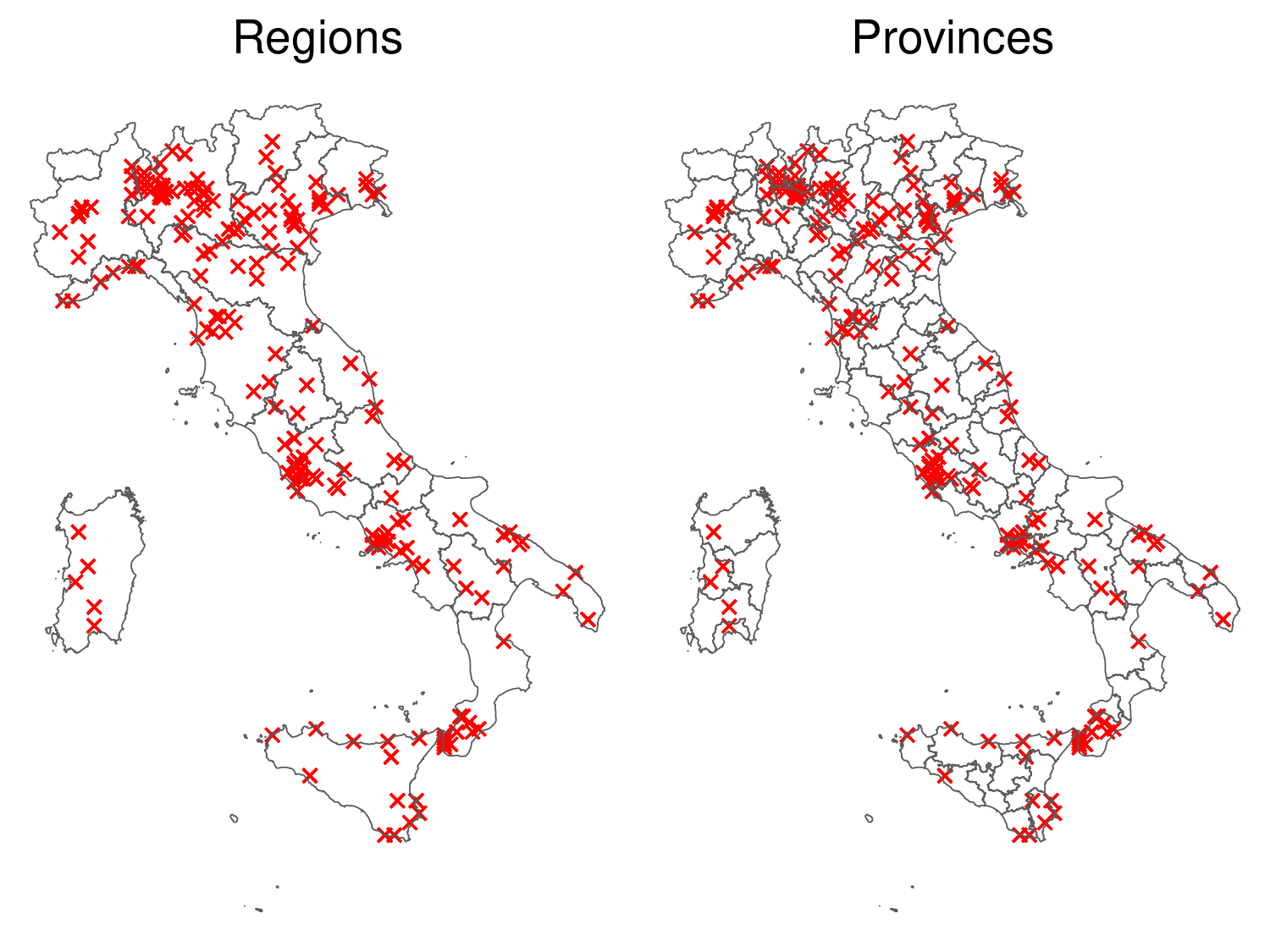

re() functionWe now consider the analysis of geostatistical data with a hierarchical structure and show how to formulate and fit a geostatistical model that accounts for the effects of the different layers of the data. For this purpose, we use the italy_sim where each of the sampled locations can be grouped according to two administrative subdivisions of Italy, regions (Admin level 2) and provinces (Admin level 3), as shown in Figure 3.11.

library(rgeoboundaries)

library(mapview)

italy_regions <- geoboundaries(country = "italy", adm_lvl = "adm2")

italy_provinces <- geoboundaries(country = "italy", adm_lvl = "adm3")par(mfrow = c(1,2))

# Map of the data with the region boundaries

map_regions <- ggplot() +

geom_sf(data = italy_sim_sf, pch = 4, color = "red") +

geom_sf(data = italy_regions, fill = NA) +

theme_void() +

labs(title = "Regions") +

theme(plot.title = element_text(hjust = 1/2))

# Map of the data with the province boundaries

map_provinces <- ggplot() +

geom_sf(data = italy_sim_sf, pch = 4, color = "red") +

geom_sf(data = italy_provinces, fill = NA) +

theme_void() +

labs(title = "Provinces") +

theme(plot.title = element_text(hjust = 1/2))

library(gridExtra)

grid.arrange(map_regions, map_provinces, ncol = 2)

italy_sim (red crosses), with the boundaries of the regions (left) and provinces (right) of Italy.

Let \(V_h\), for \(h=1,\ldots,n_V\) and \(Z_k\), for \(k=1,\ldots,n_{Z}\), be a set of mutually independent Gaussian variables with zero means and variances \(\sigma^2_{V}\) and \(\sigma^2_{Z}\), respectively. Finally let \(\mathcal{A}_h\), for \(h=1,\ldots,n_V\) and \(\mathcal{B}_k\), for \(k=1,\ldots,n_{Z}\), denote the areas encompassed by the boundaries of the region and provinces, respectively. Since we have 10 repeated observations at each locations, we shall use \(Y_{ij}\) to denote the \(j\)-th outcome (found in the data under the column y) at the \(i\)-th location \(x_i\). The model from which we generated data \(y_{ij}\) is \[

Y_{ij} = \beta_0 + \overbrace{\beta_1 d(x_i) + S(x_i)}^\text{Location-level effect} + \underbrace{\sum_{h=1}^{n_{V}} I(x_i \in \mathcal{A}_h) \times V_h}_\text{Region effect} +\\ \overbrace{\sum_{k=1}^{n_{Z}} I(x_i \in \mathcal{B}_k) \times Z_k}^\text{Province effect} + \underbrace{U_{ij}}_\text{Measurement error},

\tag{3.18}\] for \(j=1,\ldots 10\) and \(i=1,\ldots,200\), where, \(d(x_i)\) is the log-transformed population density, \(I(x \in \mathcal{R})\) is an indicator function that takes value 1 if the location \(x\) falls within the boundaries of the area denoted by \(\mathcal{R}\) and 0 otherwise. The covariance function chosen in the generation of the data was an exponential correlation function hence \({\rm cov}\{S(x), S(x') = \sigma^2 \exp\{-||x-x'||/\phi\}\).

The inclusion of the random effects \(V_h\), for the region, and \(Z_k\), for the province, can be included by using the re() function into the formula passed to glgpm as shown below.

# Location-level effect

italy_fit <- glgpm( y ~ log(pop_dens) + gp(kappa = 0.5, nugget = 0) +

# Region and province effects

re(region, province),

data = italy_sim_sf, scale_to_km = TRUE,

family = "gaussian")

## 0: 441.00731: -0.404481 1.33798 0.00000 4.62406 0.00000 0.00000 0.00000

## 1: -130.05497: -0.408825 1.35003 -0.0387110 4.66059 -0.910409 -0.00265292 0.0107965

## 2: -268.13436: -0.865126 1.39525 -0.0305269 5.92133 -1.96079 -0.666581 0.487537

## 3: -329.24560: -1.44091 1.38139 -0.546306 4.98616 -1.67936 -1.15202 0.383821

## 4: -331.20861: -1.38498 1.37256 0.165463 5.61366 -1.63033 -2.11435 0.371263

## 5: -331.28418: -0.874796 1.37322 -0.271990 5.18071 -1.62887 -0.970704 0.371407

## 6: -331.42968: -1.04087 1.37294 -0.119652 5.33634 -1.62883 -1.33321 0.375031

## 7: -331.43375: -1.07312 1.37288 -0.0878211 5.36725 -1.62883 -1.42011 0.376251

## 8: -331.43375: -1.07445 1.37288 -0.0866254 5.36840 -1.62883 -1.42489 0.376323

## 9: -331.43375: -1.07445 1.37288 -0.0866254 5.36840 -1.62883 -1.42489 0.376323

summary(italy_fit)

## Call:

## glgpm(formula = y ~ log(pop_dens) + gp(kappa = 0.5, nugget = 0) +

## re(region, province), data = italy_sim_sf, family = "gaussian",

## scale_to_km = TRUE)

##

## Linear geostatistical model

## Link: identity

## Inverse link function = x

##

## 'Lower limit' and 'Upper limit' are the limits of the 95% confidence level intervals

##

## Regression coefficients

## Estimate Lower limit Upper limit StdErr z.value p.value

## (Intercept) -1.074451 -2.031690 -0.117212 0.488396 -2.2 0.02781 *

## log(pop_dens) 1.372877 1.352018 1.393735 0.010642 129.0 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Estimate Lower limit Upper limit

## Measuremment error var. 1.2167 1.2009 1.2327

##

## Spatial Gaussian process

## Matern covariance parameters (kappa=0.5)

## Estimate Lower limit Upper limit

## Spatial process var. 0.91702 0.59470 1.414

## Spatial corr. scale 214.51980 153.60751 299.587

## Variance of the nugget effect fixed at 0

##

## Unstructured random effects

## Estimate Lower limit Upper limit

## region (random eff. var.) 0.24053 0.14553 0.3976

## province (random eff. var.) 0.37632 0.35607 0.3977

##

## Log-likelihood: 331.4338

## AIC: -648.8675In the code above, the factor variables region and province found in italy_sim are passed to re() and these are estimated as unstructured random effects as defined by Equation 3.18. From the summary of the model, we then obtain the parameter and interval estimates as reported in Table 3.1.

| Parameter | Point estimate | Lower limit | Upper limit |

|---|---|---|---|

| \(\beta_0\) | -1.074 | -2.032 | -0.117 |

| \(\beta_1\) | 1.373 | 1.352 | 1.394 |

| \(\sigma^2\) | 0.917 | 0.595 | 1.414 |

| \(\phi\) | 214.520 | 153.608 | 299.587 |

| \(\sigma^2_{V}\) | 0.241 | 0.146 | 0.398 |

| \(\sigma^2_{Z}\) | 1.457 | 1.379 | 1.540 |

| \(\omega^2\) | 0.196 | 0.194 | 0.199 |

The function to_table from the RiskMap package can be used to obtain the Latex or HTML code directly from a fit of the model. Here is an example.

to_table(italy_fit, digits = 3)We now consider the modelling of count outcomes, focusing our attention to the Binomial and Poisson geostatistical models.

The use of Binomial geostatistical model is appropriate whenever the reported counts have a known upper bound. For example, when analyzing aggregated disease counts from cross-sectional survey data, the total number of people sampled at a location defines the largest number of possible reported disease cases. Recalling the model specification of Table 1.3, we specify the Binomial geostatistical model by stating that the reported disease counts \(Y_i\) out of \(n_i\) (the maximum value that \(Y_i\) can take) at location \(x_i\), conditionally on the spatial random effects \(S(x_i)\), follow a Binomial distribution with probability \(p(x_i)\) and logit-linear predictor \[ \log\left\{\frac{p(x_i)}{1-p(x_i)}\right\} = d(x_i)^\top \beta + S(x_i). \tag{3.19}\] In this model, \(p(x_i)\) is commonly understood as representing the prevalence of the disease under consideration, defined as the proportion of individuals with the disease at a specific moment. While \(p(x_i)\) is not strictly a proportion, its interpretation as disease prevalence is justified by the fact that if we were to sample a sufficiently large population at location \(x_i\), the proportion of individuals with the disease would converge closely to the value of \(p(x_i)\).

A Binomial geostatistical model is also used when analyzing data at individual level where the outcome is binary and usually indicates the positive or negative result of a test for a disease under investigation. In this analysis, a mix of individual-level variables and location-level variables could be introduced to model the probability of a positive test. To formally write the model, we now use \(Y_{ij}\) to indicate the binary outcome taking value 1, if the test was positive, and 0, if negative, for the \(j\)-th individual sampled at location \(x_i\). The linear predictor now takes the form \[ \log\left\{\frac{p_{ij}}{1-p_{ij}}\right\} = e_{ij}^\top \gamma + d(x_{i})^\top \beta + S(x_i). \tag{3.20}\] In the model above we have distinguished between covariates \(e_{ij}\), that express the effect on \(p_{ij}\) due to individual traits (such as age and gender), and covariates \(d(x_i)\), that instead quantify the effect on \(p_{ij}\) resulting from the environmental attributes of location \(x_i\) (such as elevation, temperature, and precipitation). In this model \(p_{ij}\) – the probability of a positive test – once again can be interpreted as the disease prevalence for a population characterized by the individual traits \(e_{ij}\) and residing at location \(x_i\).